9 Bayesian Clinical Trial Design

Example

A prototype Bayesian sequential design meeting multiple simultaneous goals will be discussed. The design includes selection of outcome variables and specification of honest effect sizes for computing Bayesian power and doesn’t take sample size calculations too seriously. The design has excellent Bayesian operating characteristics regardless of the number of data looks. The design has much more effective futility stopping than frequentist designs. This is especially important in rare diseases in which committing patients to long-term studies that are “negative” at the planned maximum sample size may deprive them of participating in other trials of more promising treatments.

Source: hbiostat.org/bayes/bet/design

Asking one to compute type I assertion probability α for a Bayesian design is like asking a poker player winning > $10M/year to justify his ranking by how often he places bets in games he didn’t win.

Do you want the probability of a positive result when there is nothing? Or the probability that a positive result turns out to be nothing?

Details in Section 9.10

9.1 Goals of a Therapeutic Trial

There are many goals of comparing treatments, including quantifying evidence for

- any efficacy

- non-trivial efficacy

- inefficacy

- harm

- similarity

- non-inferiority

and sometimes the goal is just to estimate the treatment effect, whatever it is. Bayesian methods provide probabilities that each of the above conclusions is correct, given the data, the data model, and the prior distribution for the treatment effect. For estimation, Bayes provides a complete probability distribution for the unknown effect, and corresponding interval estimates (highest posterior density intervals, credible intervals). With the enormous number of traditional frequentist clinical trials that reach their planned fixed sample size but have equivocal results, one particular advantage of Bayesian sequential designs is all-important: minimizing the chance of getting to the maximum sample size with no conclusion about efficacy.

A major advantage of Bayes is that it provides evidence in favor of assertions, not just evidence against a hypothesis. That explains why Bayesian designs and analyses are the same (other than the assertion whose probability is being computed) for all study goals. Only the required sample size may differ by the trial’s intent.

Another major advantage of Bayes is that since it involves probabilities about unknowns given the current data, it does not have multiplicity problems with sequential evidence assessment (Chapter 5). Evidence from current data are not down-weighted because the data were examined previously or because they will be examined in the future. Bayesian trial design need not have a sample size, and if it does, there is nothing wrong with exceeding that sample size when results are equivocal. This implies that Bayesian designs are less likely to result in a trial having an equivocal result. And the more often one analyzes the data the lower the expected sample size. One can stop as soon as there is a pre-specified amount of evidence for one of the conclusions listed above. When sample size N is discussed below, it can refer to a planned maximum sample size, an average sample size, the 75\(^\text{th}\) percentile of a distribution of sample sizes, etc.

With a Bayesian sequential design it is easy to add a requirement that the treatment effect be estimated with a specified precision. Often this precision (e.g., half-width of a credible interval) can be estimated before the trial starts. Then the precision-required sample size can define the time of the first data look.

9.2 Challenges

- Change conceptions about operating characteristics

- Primary OC must be the correctness of whatever decision is made

- Be willing to stop a study very early when there is a very low probability of non-trivial efficacy

- Whether to stop a study early for a conclusion of similarity of efficacy of the two treatments

- Abandon need for estimating treatment effect when there is ample evidence for inefficacy

- Be objective and formal about interpretation of effects by specifying a threshold for non-trivial effect

- Removing cost from consideration of effect sizes to detect in favor of finding an outcome variable that will have rich enough information to achieve power with clinically-chosen effect size

- Regulators and sponsors must recognize that the operating characteristic that needs to be optimized is the correctness of whatever decision is made about treatment effectiveness.

- Sponsors must be willing to stop studies very early when there is strong evidence of inefficacy. This will free up patients with rare diseases for other trials, and free up resources likewise.

- To be able to stop studies very early so as to not waste resources when the probability is very high that there is no non-trivial efficacy, sponsors must jettison the need to be able to estimate how ineffective a treatment is.

- At present, consideration of whether a nonzero treatment effect is trivial is highly subjective and informal. To make optimal and defensible decisions, this must be formalized by specifying a threshold for non-triviality of effect. Importantly, this threshold is smaller than the MCID.

- The current practice of choosing a more than clinically important effect size to use in power calculations has been called “sample size samba” and has resulted in a scandalous level of overly optimistically designed trials, commonly having an equivocal result. Many equivocal trials observed an effect that was merely clinically significant. Powering the study to only detect a “super clinically significant” effect results in low actual power and wasted resources. Objectively choosing effect sizes without cost considerations, then abandoning studies that cannot find an acceptable outcome variable that has sufficient resolution to achieve adequate power with a “real” effect size will prevent a large amount of waste and make clinical trials more ethical while freeing up patients with rare diseases to participate in other trials.

9.3 Bayesian Operating Characteristics

A decision maker is judged by their ability to make the correct decision with all the information that was available to them at the moment that the decision was made. Outside of frequentist null hypothesis significance testing, we never judge a decision maker by their oracle abilities, e.g., by counting how often they make a judgment that only an oracle could know is wrong.

Frequentist operating characteristics related to decision making (i.e., not to estimation) involve separate consideration of two data generating mechanisms: type I (setting treatment effect to zero to compute \(\alpha\)) and type II (setting treatment effect to a nonzero value to detect to compute power). The frequentist paradigm does not allow consideration of the probability that the decision is correct but instead deals with the probability that a certain decision will be made under a certain single treatment effect. For example, \(\alpha\) is the probability that a decision to consider the treatment beneficial will be triggered when the treatment is ignorable. On the other hand, Bayesian decision-related operating characteristics deal directly with the probability that a specific decision is correct, e.g., the proportion of times that a decision that the treatment is beneficial is correct, i.e., that the data on which the decision was made were generated by a truly beneficial treatment. The complement of this proportion leads to the error probability, i.e., the probability that a treatment has no effect or causes harm when the Bayesian posterior probability triggered a decision that it was beneficial.

The primary Bayesian operating characteristic is how often a conclusion is correct. The primary frequentist operating characteristic is how often one reaches a conclusion.

Bayesian inference allows one to make both positive (“the drug works”) and negative (“the drug doesn’t work”) conclusions. Evaluation of Bayesian operating characteristics can focus on either the probability that whatever decision is made is correct, or the correctness of a single decision such as a drug works. Frequentism based on null hypothesis testing and P-values only brings evidence against a hypothesis, so the only possible actions are (1) to reject \(H_0\) (the one actual conclusion a frequentist approach can make), (2) get more data, or (3) stop with no conclusion except a failure to reject \(H_0\). The operating characteristic given greatest emphasis by regulators has been type I assertion probability \(\alpha\), the probability of rejecting \(H_0\) when it is magically known to be true.

When designs are being formulated and when N is being selected, researchers need to know that the typical way the trial is likely to unfold is acceptable. The performance of a proposed Bayesian design must be judged by its Bayesian operating characteristics (OCs). Bayes is about prospective analysis and interpretation, i.e., trying to uncover the unknown based on what is known. It minimizes the use of unobservables in acting in forward information-flow predictive mode. Frequentist OCs deal with what may happen under unknowable assumptions about the treatment effect generating the data. Bayesian OCs are the only OCs important when running Bayesian analyses, interpreting the results, and knowing how much to trust them. A key point is that accuracy of conclusions pertains to what has been learned from data, not what data may arise under certain unknowable assumptions about effects. Accuracy is a post-data consideration.

There are three classes of Bayesian OCs:

- OCs that provide design objectives but play no role in analysis or interpretation of results

- OCs used to ensure that when a decision is made using data it has a high probability of being the correct decision

- precision, e.g., expected width of a 0.95 highest posterior density uncertainty interval for a treatment effect when the simulated study did not stop early for inefficacy

9.3.1 Bayesian OCs Providing Only Design Objectives

In the first class of OCs are two types of Bayesian OCs, which are related to each other: assurance/power and expected sample size. Assurance is the ability of the design to detect a real treatment effect were it to exist. This is Bayesian power (the chance of achieving a high posterior probability of efficacy if there is efficacy). As introduced in Section 1.3, define the following probability distributions:

- \(a\): pre-specified analysis prior for the treatment effect parameter

- \(s\): the simulation/sampling prior or prior of an empowered critic of the study design

- \(a_e\): the analysis prior restricted to the domain of effective treatment effects that one would not want to miss; this is typically the uncertainty distribution for the MCID (minimal clinically important difference)

Bayesian power is for example \(P(P(d > 0, data, a) > 0.95, a_{e})\) when \(d\) is the treatment effect for which we are assuming that positive values indicate benefit to the patient. The notation indicates that we estimate the probability over a large number of simulations that the posterior probability of efficacy exceeds 0.95 when the true unknown treatment effect has the MCID uncertainty distribution \(a_e\). Clinical trials are simulated over a universe of \(d\) values defined by the distribution \(a_e\). One example of \(a_e\) might be a uniform interval from MCID - 10% to MCID + 10% where MCID is the (usually very subjective) current best guess as the minimum amount of effectiveness that would benefit patients.

The expected sample size is the average over a large number of simulations of the number of observations at the first moment that any of the stopping rules were satisfied. The simulations are done over the whole universe of effect sizes given by the analysis prior \(a\).

9.4 Bayesian OCs For Correctness of Decisions

Once any data have been accumulated and analyzed, the all-important OC involves judgment of the correctness of a decision at the moment that decision is made. Bayesian approaches optimize the chance of conclusions being correct if the following three conditions hold:

- the variables being analyzed are measured unbiasedly

- the data model is consistent with the experimental design and is reasonably well-fitting

- the prior used in the analysis is not in major conflict with the prior used by the judge of the accuracy of the study results

Quantifying correctness of conclusions for a Bayesian design is equivalent to assessing predictive accuracy, which involves examining what’s real as a function of predicted values. Correctness of conclusions (predictions) is easy to check in a simulation study, as explained in detail in Section 9.10. The process involves looking back to the treatment effect that generated the current trial data after the decision about the treatment effect has been made. After a large number of trials are simulated, the subset of trials reaching a certain conclusion is chosen, and for that subset the proportion of trials for which the true treatment effect is consistent with the conclusion is computed.

In actual studies most of the attention about this most important OC is focused on the choice of the prior distribution. When the prior is acceptable, the Bayesian procedure will result in optimum decisions in the eye of the beholder of the prior, by definition. Sensitivity of decisions to the choice of a prior would be a useful assessment at the design stage.

See the tab below for more detail about simulating the probability of correctness of decisions. The simulations involve estimating error probabilities such as the probability the treatment is actually inefficacious (no benefit or is harmful) when the Bayesian evidence for effectiveness is conclusive, i.e., \(P(d < 0 | P(d > 0 | \text{data}, a) > 0.95, s)\), which is the probability that the treatment is ineffective (or worse) when we obtain a high probability that it is effective under the analysis prior \(a\), but when the simulation drew treatment effects from the sampling prior \(s\) that doesn’t exactly match \(a\). It is interesting to note that, as examplified in the simulation appearing later in this chapter, even when there is a disagreement in some parts of posterior distributions when computed under conflicting priors, the probability that a decision is correct can remain quite high.

In Chapter 5 Bayesian sequential analysis is studied in more detail, though the simulations there are simple from the standpoint of having only one reason for stopping. In that situation, one stops, for example, when a posterior probability PP exceeds 0.95. When the simulation is done using the same prior as the prior used in the analysis, if the PP is 0.96 upon stopping, the true probability of a treatment effect will be exactly 0.96. Trusting the Bayesian results at the decision point requires the PP being well calibrated. This happens when there is no major conflict between the design prior and the analysis prior.

An operating characteristic (OC) is a long-run property of a statistical quantity. In frequentist statistics, “long-run” means over an infinite sequence of repetitions of an experiment (infinitely many data samples), for which a quantity of interest (e.g., a true mean difference between treatments) is fixed at a single number such as zero (null value, for computing \(\alpha\)) or at a minimum clinically important difference (MCID, a nonzero effect, for computing \(\beta\) = 1 - power). A frequentist simulation involves sampling repeatedly with a single assumed effect, whereas a Bayesian simulation will not have any two repetitions with the same effect size. The task of frequentist inference is to bring evidence against an assumed effect value, and the task of Bayesian inference is to uncover the true unknown effect that generated the data at hand, whatever that effect is. Like p-values, frequentist simulations involve hypothetical unobservable quantities (true treatment effect) whereas Bayesian simulations primarily involve only observables, as effects are generated over an entire smooth distribution of allowable effect values.

Frequentist OCs are \(\alpha\), \(\beta\), bias, precision, confidence interval coverage, and required sample sizes to achieve a given \(\beta\). Only precision (e.g., standard error or half-width of a confidence interval) or confidence interval coverage can be computed without setting unobservable quantities to constants. Occasionally a sponsor desires to use Bayesian methods as substitutes for traditional frequentist methods. Two examples are

- One may obtain more accurate confidence intervals in certain non-normal situations, where traditional asymptotic confidence intervals are not very accurate, by using Bayesian posterior distributions with non-informative priors.

- A sequential or complex adaptive design may not be able to provide conventional p-values, yet the sponsor still wishes to use a frequentist framework.

In such cases it is incumbent upon the sponsor to demonstrate accurate frequentist OCs. In other cases, a sponsor intends to use a pure Bayesian procedure, but the sponsor and the FDA disagree on the choice of a prior distribution. In such cases, it may be reasonable for the FDA to ask the sponsor to demonstrate that the Bayesian procedure yields an acceptable \(\alpha\) (although it may be more straightforward for the FDA to just emphasize their own analysis using their prior).

In the remainder of cases, for which the sponsor intends to use an all-Bayesian analysis and adding frequentist interpretations to the Bayesian result will add confusion to the more direct evidence quantification offered by Bayes. For a completely Bayesian analysis, Bayesian OCs are the relevant ones. The most important Bayesian OC is the probability that the correct decision is made when the study ends. Bayesian power and assurance are other important Bayesian OCs, as well as the width of the Bayesian highest posterior density interval or credible interval at the planned maximum sample size. Bayesian power can be defined as the probability that the posterior probability will achieve or exceed a threshold (e.g., 0.95 or 0.975) at the planned sample size, under an assumption that the actual treatment effect is at the MCID. Assurance deals with the more realistic case where uncertainty in MCID is allowed, by using a distribution instead of a point for MCID.

Consider decisions based on posterior probabilities, which are probabilities that assertions about unknown parameters are true given the prior and the current data. Denote a posterior probability of interest as \(P(... | a, \text{data})\) where … is an assertion about treatment effectiveness or safety (e.g., the reduction in blood pressure is positive), data denotes the current data, and \(a\) is the prior distribution assumed for the evidence assessment (analysis prior). Later we drop data from the conditioning just to simplify notation, as all posterior probabilities are conditional on current data.

The primary Bayesian OC is the probability that an assertion is true, which will be exactly the computed posterior probability of the assertion when viewed from the standpoint of the prior used in the Bayesian analysis and when the assumed data distribution is correct (the latter is also required for frequentist inference). When the prior \(a\) is settled upon once and for all through a collaboration between the sponsor and FDA, there is no need to simulate Bayesian OCs except to show adequate sensitivity to detect clinically important effects (Bayesian power). When there is a significant conflict between the prior used in the analysis and the prior of an outside observer, the primary Bayesian OC will not be as stated. To evaluate the OC as seen by the outside observer, let \(s\) be that observer’s prior. The impact of the difference between \(s\) and the analysis prior \(a\) is evaluated by computing (under prior \(s\)) OCs for posterior probabilities computed under \(a\). Simulations can compute this by choosing various sampling priors \(s\) that include the observer’s prior.

Examples of primary Bayesian OCs are as follows, where \(p\) denotes a decision threshold for a posterior probability (e.g., 0.95 or 0.975), \(d\) denotes the true unknown treatment effect, \(\epsilon\) denotes the threshold for similarity of effects of two treatments, and \(a\) and \(s\) are the analysis and sampling priors, respectively.

- Accuracy of evidence for benefit as judged by \(s\): \(P(d > 0 | s, P(d > 0 | a) > p)\)

- Accuracy of evidence for harm: \(P(d < 0 | s, P(d < 0 | a) > p)\)

- Accuracy of evidence for similarity: \(P(|d| < \epsilon | s, P(|d| < \epsilon | a) > p)\)

- Accuracy of evidence for non-inferiority: \(P(d > -\epsilon| s, P(d > -\epsilon | a) > p)\)

To estimate the first OC above, the probability that a treatment judged to be beneficial in the analysis is in fact beneficial when judged by a reviewer, one simulates a large number of trials under a specified distribution of \(d\) (\(s\), the sampling prior distribution for \(d\)), takes the subset of trials for which the analysis prior yields \(P(d > 0 | a) > p\), and computes the fraction of this subset of trials that were truly generated with \(d > 0\). This estimates the probability of correctness of an assertion of benefit, the most important property for Bayesian posterior inference.

Accuracy of conclusions of a Bayesian analysis come from the prior distribution as well as the same features required by frequentist inference: an adequately fitting data model, appropriate experimental design, and having unbiased patient measurements. Considering what is unique about Bayes, the primary OCs follow strictly from the prior. Results will be more sensitive to the prior when the sample size is small or in a large study when one is conducting an early data look that makes the sample size effectively small. When the sampling distribution for \(d\) equals the analysis prior, no simulations are needed to show primary Bayesian OCs as they are guaranteed to be what is claimed when \(a=s\). For example,

- \(P(d > 0 | a, P(d > 0, a) > p) = p^*\)

- \(P(d > -\epsilon | a, P(d > 0, a) > p) = p^*\)

where \(p^*\) is the average posterior probability (using the analysis prior) when that probability exceeds \(p\). \(p^*\) must exceed \(p\).

For example, if using a posterior threshold of 0.95 for evidence of effectiveness, more than 0.95 of the subset of simulated samples having a posterior probability of benefit greater than 0.95 will actually be found to have been generated by positive values of \(d\).

When there is solid agreement between sponsors and regulators about the prior, simulated Bayesian OCs may not always be required, though they are excellent tools for validating coding and Bayesian MCMC algorithms. But in most cases the sensitivity of Bayesian OCs to the choice of priors should be simulated1. By having various departures of the analysis prior from the sampling prior, one can learn how easy it is for the accuracy of conclusions to fall below an acceptable level. For example, one may determine that \(P(d > 0)\) is 0.985 in the simulated trials where the posterior probability exceeds 0.975, when the sampling and analysis priors align. When the priors are even strongly misaligned, unless the sample size is very small, the accuracy of conclusions of treatment benefit is still likely to be above a quite acceptable level of 0.9 even though it is below the ideal level of 0.9852.

2 Note that posterior probabilities of any efficacy are not as affected by the prior as probabilities of large efficacy.

1 As an aside, some sponsors may wish to base sample size calculations on insensitivity to the choice of priors

9.5 Example: Two-Treatment Parallel-Group Efficacy Design

Goals

- Maximize the probability of making the right decision, with 4 decisions possible (efficacy, similarity, inefficacy, not possible to draw any conclusion with available resources)

- Minimize the probability of an inconclusive result at study end

- Minimize the expected sample size

- Stop as early as possible if there is a very high probability that the treatment’s effect is trivial or worse, even if the magnitude of the effect cannot be estimated with any precision

- If stopping early for non-trivial efficacy, make sure that the sample size provides at least crude precision of the effect estimate

- Do this by not making the first efficacy look until the lowest sample size for which at least crude precision of the treatment effect is attained

- Avoid gaming the effect size used in a power calculation

- Recognize that traditional sample sizes are arbitrary and require too many assumptions

- Recognize that what appears to be a study extension to one person will appear to be an interim analysis to another who doesn’t need to do a sample size calculation

- Build clinical significance into the quantification of evidence for efficacy

Definitions

- \(\Delta\): true treatment effect being estimated

- \(\delta\): minimum clinically important \(\Delta\)

- \(\gamma\): minimum detectable \(\Delta\) (threshold for non-trivial treatment effect, e.g. \(\frac{1}{3}\delta\))

- SI: similarity interval for \(\Delta\), e.g., \([-\frac{1}{2}\delta, \frac{1}{2}\delta]\)

- \(N\): average sample size, used for initial resource planning. This is an estimate of the ultimate sample size based on assuming \(\Delta = \delta\). \(N\) is computed to achieve a Bayesian power of 0.9 for efficacy, i.e., achieving a 0.9 probability at a fixed sample size that the probability of any efficacy exceeds 0.95 while the probability of non-trivial efficacy exceeds 0.85, i.e., \(P(\Delta > 0) > 0.95\) and \(P(\Delta > \gamma) > 0.85\).

- More than one \(N\) can be computed, e.g., \(N\) such that if \(\Delta = \gamma\) there is ever a high probability of stopping for inefficacy

- \(N_p\): minimum sample size for first efficacy assessment, based on required minimum precision of estimate of \(\Delta\)

- Probability cutoffs are examples

- This design does not require a separate futility analysis, as inefficacy subsumes futility

- Below, “higher resolution Y” can come from breaking ties in the outcome variable (making it more continuous), adding longitudinal assessments, or extending follow-up

Example operating characteristics for this design are simulated in Section 9.10.

- Bayesian inference opens up a wide variety of adaptive trials

- stat methods need no adaptations

- only sample size and Bayesian power calculations are complicated

- But here consider non-adaptive trials with possible sequential monitoring

9.6 Sample Size Estimation

- In a sense more honest than frequentist b/c uncertainty admitted

- SDs

- effect size

- Whitehead et al. (2015): sample size calculation for control vs. set of active tx

- goal: high PP that ≥ 1 tx better than control or

- high PP that none is better

- Other good references: Kunzmann et al. (2021), Spiegelhalter et al. (1993), Grouin et al. (2007), Spiegelhalter & Freedman (1986), Adcock (1997), Joseph & Bélisle (1997), Pezeshk & Gittins (2002), Simon & Freedman (1997), Wang et al. (2005), Braun (n.d.), this

- Spiegelhalter et al. (1993) has clear rationale for unconditional power integrating over prior

- “realistic assessment of the predictive probability of obtaining a ‘significant’ result”

- discusses damage caused by using fixed optimistic treatment effect

- Uebersax has an excellent article on Bayesian unconditional power analysis which also discusses the hazards of choosing a single effect size for power estimation

9.7 Bayesian Power and Sample Size Examples

- Example notion of power: probability that PP will exceed a certain value such as 0.95 (Bayesian assurance)

- Compute as a function of the unknown effect, see which true effects are detectable for a given sample size

- If the minimum clinically important difference (MCID) is known, compute the success probability at this MCID

- Better: take uncertainty into account by sampling the MCID from an uncertainty (prior) distribution for it, using a different value of MCID for each simulated trial. The Bayesian power (probability of success) from combining all the simulations averages over the MCID uncertainties. This is demonstrated in a time-to-event power calculation below.

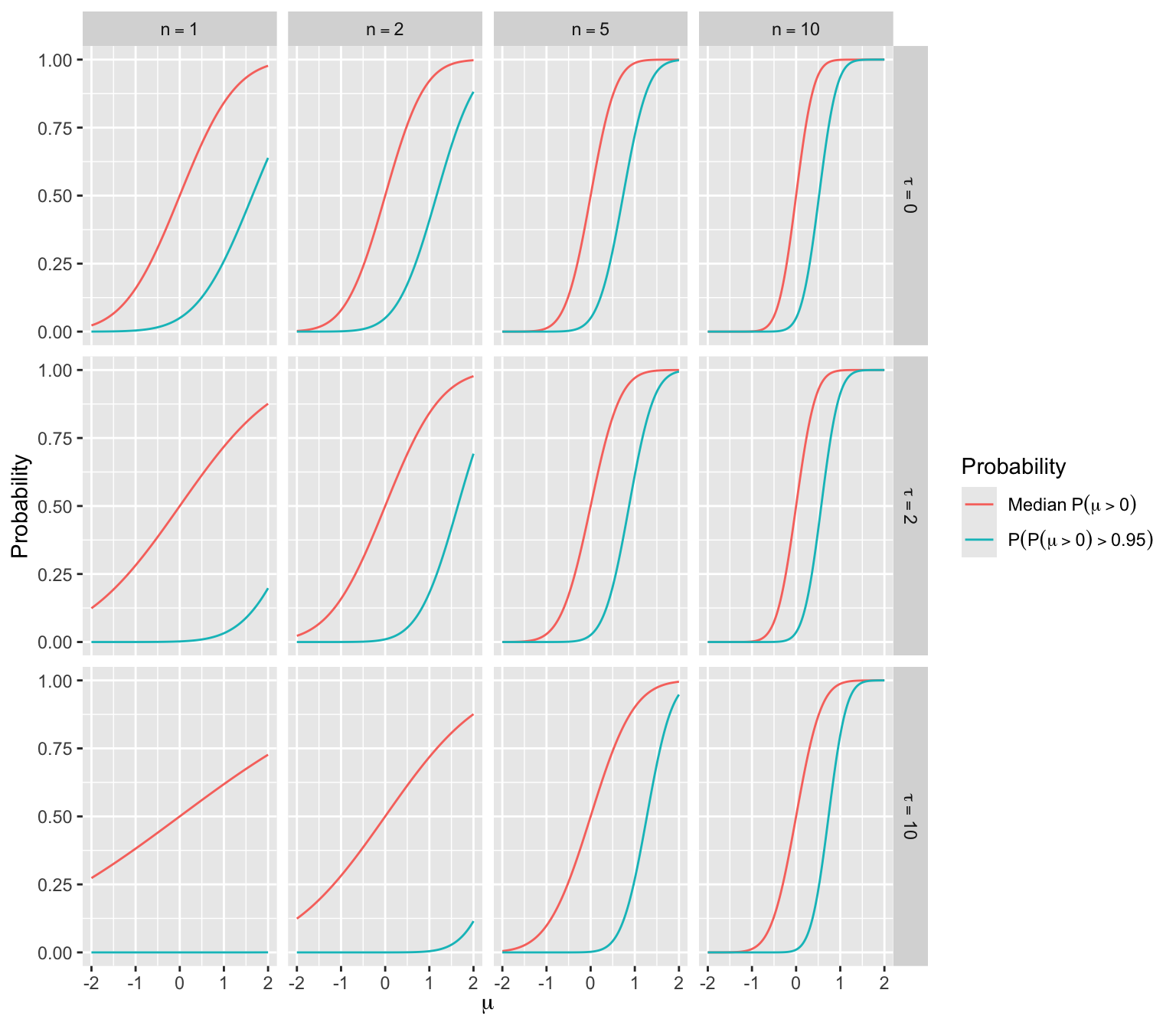

9.7.1 One-Sample Mean Example

- Consider earlier one-sample mean problem

- Data are normal with variance 1.0 and the prior is normal with mean zero and variance \(\frac{1}{\tau}\)

- Take \(\mu > 0\) to indicate efficacy

- PP \(= P(\mu > 0 | \textrm{data}) = \Phi(\frac{n \overline{Y}}{\sqrt{n + \tau}})\)

- \(\overline{Y}\) is normally distributed with mean \(\mu\) and variance \(\frac{1}{n}\)

- Long-run average of \(\overline{Y}\) is \(\mu \rightarrow\) long-run median of \(\overline{Y}\) is also \(\mu \rightarrow\) median PP \(= \Phi(\frac{n \mu}{\sqrt{n + \tau}})\)

- Let \(z = \Phi^{-1}(0.95)\)

- Chance that PP exceeds 0.95 is \(P(\Phi(\frac{n \overline{Y}}{\sqrt{n + \tau}}) > 0.95) = P(\frac{n \overline{Y}}{\sqrt{n + \tau}} > z) = \Phi(\frac{n \mu - z \sqrt{n + \tau}}{\sqrt{n}})\)

- For varying \(n\) and \(\tau\) the median PP and the Bayesian power are shown in the figure

Code

d <- expand.grid(mu=seq(-2, 2, length=200),

n=c(1, 2, 5, 10),

tau=c(0, 2, 10),

what=1:2)

d <- transform(d,

p = ifelse(what == 1,

pnorm(n * mu / sqrt(n + tau)),

pnorm((n * mu - qnorm(0.95) * sqrt(n + tau)) / sqrt(n))),

n = factor(n),

tau = factor(tau)

)

d$Probability <- factor(d$what)

levels(d$n) <- paste0('n==', levels(d$n))

levels(d$tau) <- paste0('tau==', levels(d$tau))

ggplot(d, aes(x=mu, y=p, col=Probability)) + geom_line() +

scale_color_discrete(labels=expression(Median~P(mu > 0),

P(P(mu > 0) > 0.95))) +

facet_grid(tau ~ n, labeller=label_parsed) +

xlab(expression(mu)) +

ylab('Probability')

9.7.2 Sample Size for Comparing Time to Event

In this example we compute the sample size needed to achieve adequate Bayesian power to achieve evidence for various assertions. The treatment effect is summarized by a hazard ratio (HR) in a proportional hazards (PH) model. Assertions for which the study needs to have the ability to provide conclusive evidence include

- any reduction of hazard of an event (HR < 1)

- HR < 0.98, 0.96, 0.94, …

- harm or trivial benefit (HR > 0.95)

The trial’s actual analysis might use a flexible parametric PH model implemented in the rstanarm Bayesian survival analysis package. Bayesian operating characteristics are simulated here using the Cox PH model.

Take Bayesian power to be the probability that the posterior probability will exceed 0.975 when the true HR is a constant3. For the first two types of assertions, set the HR generating the data to 0.75 (assumed minimally clinically important difference), and for the third type of assertion set HR to 1.25.

3 Ideally one would consider power over a distribution of HRs as done below.

The actual analysis to be used for the clinical trial should adjust for covariates, but covariates are ignored in our simulations. Bayesian power will increase with the addition of covariates.

If the treatment groups are designated A (standard of care) and B (new treatment), suppose that the following Weibull survival distribution has been found to fit patient’s experiences under control treatment.

\[S(t) = e^{- 0.9357 t^{0.5224}}\] The survival curve for treatment B patients is obtained by multiplying 0.9357 by the B:A hazard ratio (raising \(S(t)\) to the HR power).

Assume further that follow-up lasts 3y, 0.85 of patients will be followed a full 3y, and 0.15 of patients will be followed 1.5y when the final analysis is done4.

4 The R Hmisc package spower function will simulate much more exotic situations.

To compute Bayesian power, use the normal approximation to the Cox partial likelihood function, i.e., assume that log(HR) is normal and use standard maximum likelihood methods to estimate the mean and standard deviation of the log(HR). The Cox log-likelihood is known to be very quadratic in the log HR, so the normal approximation will be very accurate. For a prior distribution assume a very small probability of 0.025 that HR < 0.25 and a probability of 0.025 that it exceeds 4. The standard deviation for log(HR) that accomplishes this is \(\frac{\log(4)}{1.96} = 0.7073 = \sigma\).

With a normal approximation for the data likelihood and a normal prior distribution for log(HR), the posterior distribution is also normal. Let \(\theta\) be the true log(HR) and \(\hat{\theta}\) be the maximum likelihood estimate of \(\theta\) in a given simulated clinical trial. Let \(s\) be the standard error of \(\hat{\theta}\)5.

5 Note that in a proportional hazards model such as the one we’re using, the variance of \(\hat{\theta}\) is very close to the sum of the reciprocals of the event frequencies in the two groups.

Let \(\tau = \frac{1}{\sigma^{2}} = 1.9989\) and let \(\omega = \frac{1}{s ^ {2}}\). Then the approximate posterior distribution of \(\theta\) given the data and the prior is then normal with mean \(\mu\) and variance \(v\) where \(v = \frac{1}{\tau + \omega}\) and \(\mu = \frac{\omega \hat{\theta}}{\tau + \omega}\).

The posterior probability that the true HR < \(h\) is the probability that \(\theta < \log(h)\), which is \(\Phi(\frac{\log(h) - \mu}{\sqrt{v}})\).

Simulation

First consider code for generating outcomes for one clinical trial of size \(n\) per group, follow-up time cutoff \(u\), secondary cutoff time \(u2\) with probability \(pu2\), and true hazard ratio \(H\). The result is posterior mean and variance and the P-value from a frequentist likelihood ratio \(\chi^2\) test with the Cox PH model.

Code

require(data.table)

require(ggplot2)

require(rms)

require(survival)

sim1 <- function(n, u, H, pu2=0, u2=u, rmedian=NA) {

# n: number of pts in each group

# u: censoring time

# H: hazard ratio

# pu2: probability of censoring at u2 < u

# rmedian: ratio of two medians and triggers use of log-normal model

# Generate failure and censoring times for control patients

# Parameters of a log-normal distribution that resembles the Weibull

# distribution are below (these apply if rmedian is given)

mu <- -0.2438178

sigma <- 1.5119236

ta <- if(is.na(rmedian))

(- log(runif(n)) / 0.9357) ^ (1.0 / 0.5224)

else exp(rnorm(n, mu, sigma))

ct <- pmin(u, ifelse(runif(n) < pu2, u2, u))

ea <- ifelse(ta <= ct, 1, 0)

ta <- pmin(ta, ct) # apply censoring

# Generate failure and censoring times for intervention patients

tb <- if(is.na(rmedian))

(- log(runif(n)) / (H * 0.9357)) ^ (1.0 / 0.5224)

else exp(rnorm(n, mu + log(rmedian), sigma))

ct <- pmin(u, ifelse(runif(n) < pu2, u2, u))

eb <- ifelse(tb <= ct, 1, 0)

tb <- pmin(tb, ct)

ti <- c(ta, tb)

ev <- c(ea, eb)

tx <- c(rep(0, n), rep(1, n))

f <- if(is.na(rmedian)) coxph(Surv(ti, ev) ~ tx) else

survreg(Surv(ti, ev) ~ tx, dist='lognormal')

chi <- 2 * diff(f$loglik)

theta.hat <- coef(f)[length(coef(f))]

s2 <- vcov(f)['tx', 'tx']

tau <- 1.9989

omega <- 1.0 / s2

v <- 1.0 / (tau + omega)

mu <- omega * theta.hat / (tau + omega)

if(! all(c(length(mu), length(v), length(chi))) == 1) stop("E")

list(mu = as.vector(mu),

v = as.vector(v),

pval = as.vector(1.0 - pchisq(chi, 1)))

}Simulate all statistics for \(n=50, 51, ..., 1000\) and HR=0.75 and 1.25, with 10 replications per \(n\)/HR combination.

Code

HR <- 0.75

ns <- seq(50, 1000, by=1)

R <- expand.grid(n = ns, HR=c(0.75, 1.25), rep=1 : 10)

setDT(R) # convert to data.table

g <- function() {

set.seed(7)

R[, sim1(n, 3, HR, 0.15, 1.5), by=.(n, HR, rep)]

}

S <- runifChanged(g, R, sim1, file='wsim.rds')The number of trials simulated for each of the two HR’s was 9510.

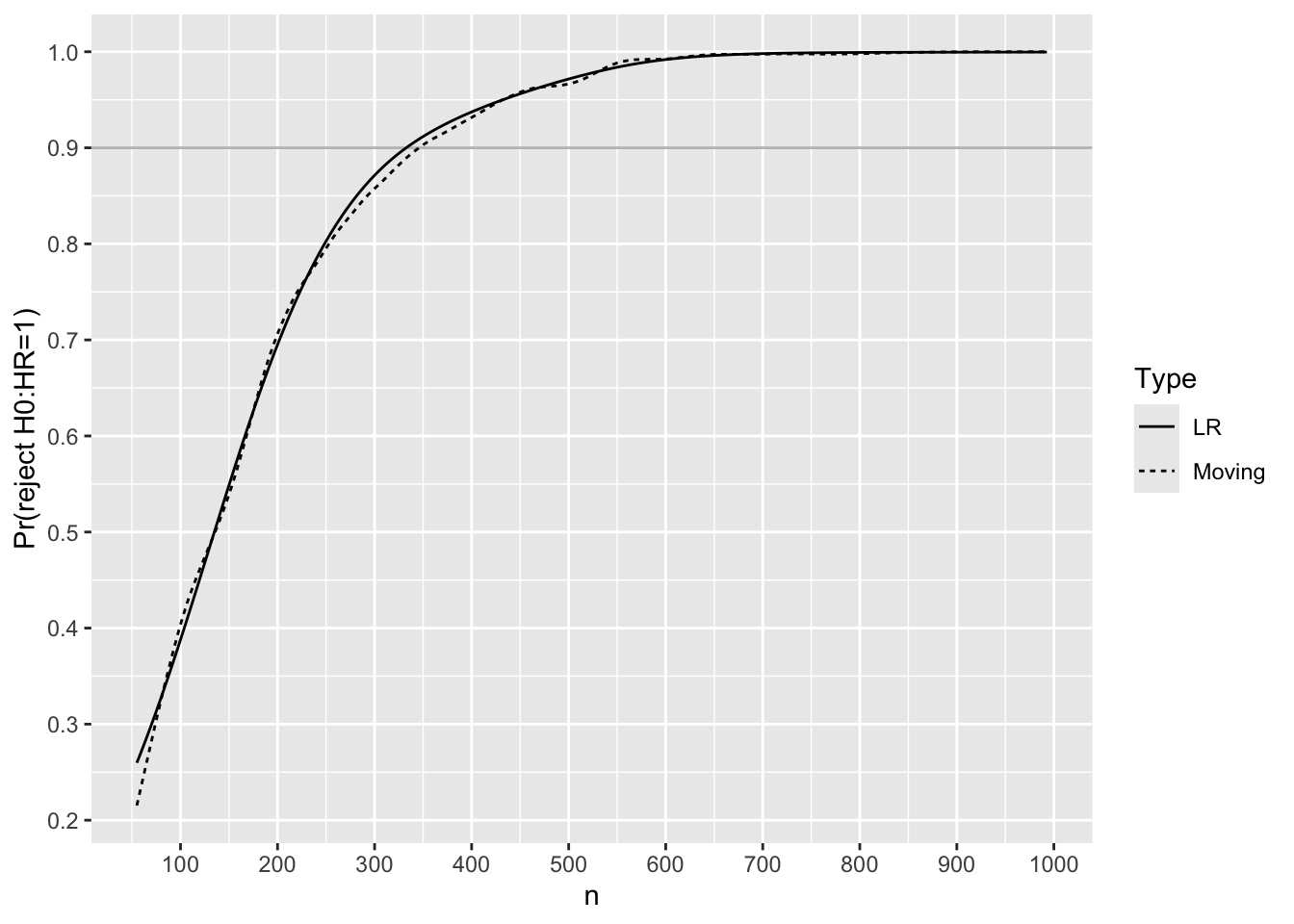

Before simulating Bayesian power, simulate frequentist power for the Cox PH likelihood ratio \(\chi^2\) test with two-sided \(\alpha=0.05\). Effect size to detect is HR=0.75.

Code

R <- S[HR == 0.75, .(reject = 1 * (pval < 0.05)), by=n]

# Compute moving proportion of trials with p < 0.05

# Use overlapping windows on n and also logistic regression with

# a restricted cubic spline in n with 5 knots

m <- movStats(reject ~ n, eps=100, lrm=TRUE,

data=R, melt=TRUE)

ggplot(m,

aes(x=n, y=reject, linetype=Type)) + geom_line() +

geom_hline(yintercept=0.9, alpha=0.3) +

scale_x_continuous(breaks=seq(0, 1000, by=100)) +

scale_y_continuous(breaks=seq(0, 1, by=0.1),

minor_breaks=seq(0, 1, by=0.05)) +

ylab('Pr(reject H0:HR=1)')

Use linear interpolation to solve for the sample size needed to have 0.9 frequentist power.

Code

lookup <- function(n, p, val=0.9)

round(approx(p, n, xout=val)$y)

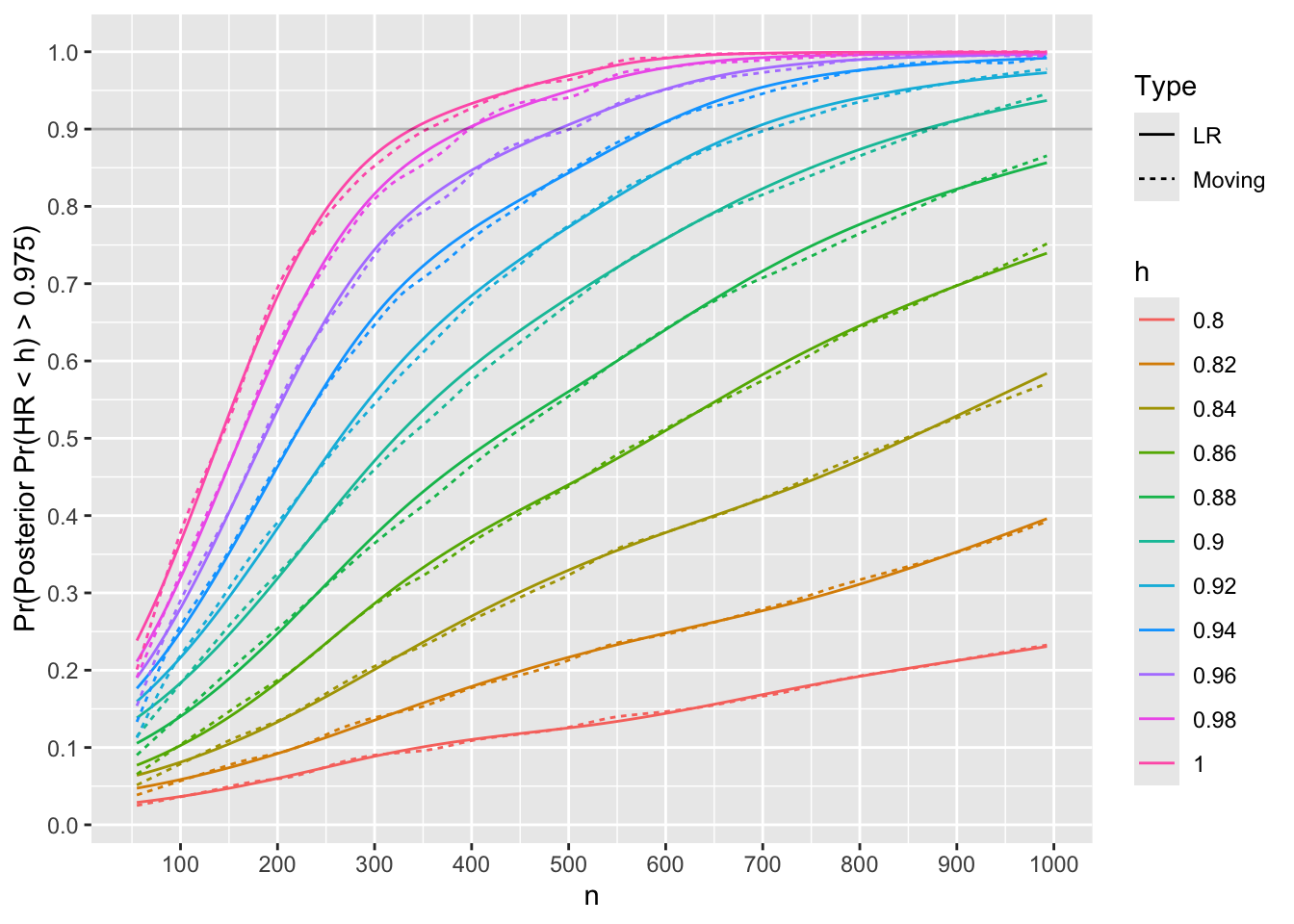

m[Type == 'LR', lookup(n, reject)][1] 333For each trial simulated with HR=0.75, compute posterior probabilities that the true HR \(< h\) for various HR cutoffs \(h\). Both moving proportions and logistic regression with a restricted cubic spline in \(n\) are used to smooth the results to estimate the relationship between \(n\) and Bayesian power, i.e., the probability that the posterior probability reaches 0.975 or higher.

Code

R <- S[HR == 0.75]

hs <- seq(0.8, 1.0, by = 0.02)

pp <- function(mu, v) list(h=hs, p=pnorm((log(hs) - mu) / sqrt(v)))

R <- R[, pp(mu, v), by=.(n, rep)]

# Compute moving proportion with posterior prob > 0.975

# with overlapping windows on n, and separately by h

R[, phi := 1 * (p >= 0.975)]

m <- movStats(phi ~ n + h, eps=100, lrm=TRUE, lrm_args=list(maxit=30),

data=R, melt=TRUE)

ggplot(m,

aes(x=n, y=phi, color=h, linetype=Type)) + geom_line() +

geom_hline(yintercept=0.9, alpha=0.3) +

scale_x_continuous(breaks=seq(0, 1000, by=100)) +

scale_y_continuous(breaks=seq(0, 1, by=0.1),

minor_breaks=seq(0, 1, by=0.05)) +

ylab('Pr(Posterior Pr(HR < h) > 0.975)')

Use linear interpolation to compute the \(n\) for which Bayesian power is 0.9.

Code

u <- m[Type == 'LR', .(nmin=lookup(n, phi)), by=h]

u h nmin

<char> <num>

1: 0.8 NA

2: 0.82 NA

3: 0.84 NA

4: 0.86 NA

5: 0.88 NA

6: 0.9 866

7: 0.92 687

8: 0.94 584

9: 0.96 489

10: 0.98 394

11: 1 339Code

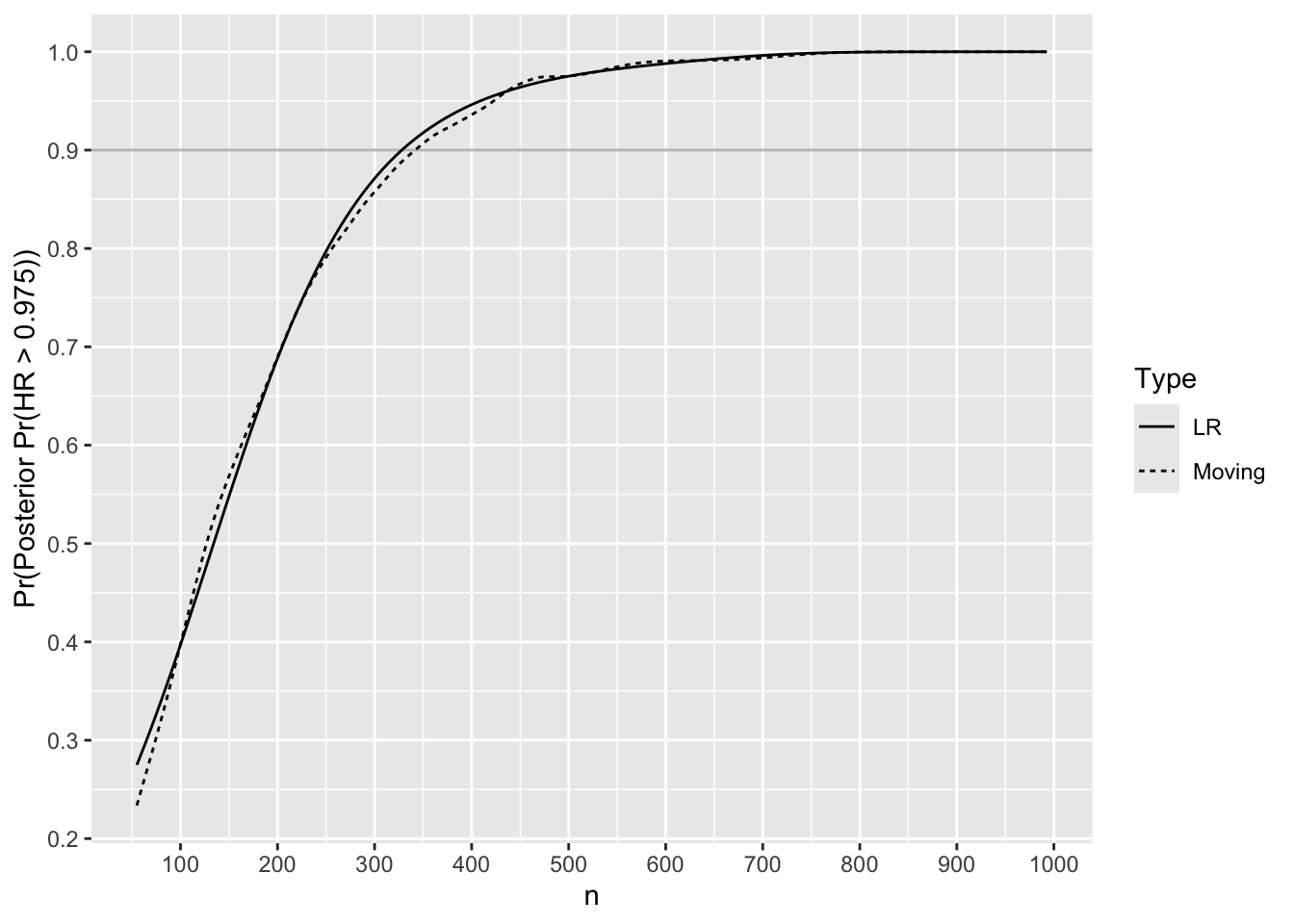

Nprime <- u[h == 1, nmin]Check the Bayesian power to detect a trivial or worse treatment benefit when true HR is 1.25.

Code

HR <- 1.25

hs <- 0.95

R <- S[HR == 1.25, pp(mu, v), by=.(n, rep)]

# One minus because we want Pr(HR > 0.95)

R[, phi := 1 * (1.0 - p > 0.975)]

m <- movStats(phi ~ n, eps=100, lrm=TRUE, data=R, melt=TRUE,

lrm_args=list(maxit=30))

ggplot(m, aes(x=n, y=phi, linetype=Type)) + geom_line() +

ylab('Pr(Posterior Pr(HR > 0.975))') +

geom_hline(yintercept=0.9, alpha=0.3) +

scale_x_continuous(breaks=seq(0, 1000, by=100)) +

scale_y_continuous(breaks=seq(0, 1, by=0.1),

minor_breaks=seq(0, 1, by=0.05))

Find \(n\) where it crosses 0.9.

Code

m[Type == 'LR', lookup(n, phi)][1] 328Accounting for Uncertainty in MCID

Different reviewers and journal readers may have different ideas of a minimal clinically important difference to detect. Instead of setting the MCID to HR=0.75, suppose that all HR in the interval [0.65, 0.85] are equally likely to be the MCID at the time the study findings are made known. Let’s re-simulate 1000 trials to compute Bayesian power at a sample size of 339 per group, varying the MCID over [0.65, 0.85].

Code

set.seed(7)

R <- expand.grid(n = Nprime, HR=runif(1000, 0.65, 0.85))

setDT(R) # convert to data.table

S <- R[, sim1(n, 3, HR, 0.15, 1.5), by=.(n, HR)]

pp <- function(mu, v) pnorm((log(1.0) - mu) / sqrt(v))

bp <- S[, mean(pp(mu, v) > 0.975)]

bp[1] 0.848The Bayesian power drops from 0.9 to 0.848 when the stated amount of uncertainty is taken into account for MCID.

9.7.3 Bayesian Power for 3-Level Ordinal Outcome

Suppose that the above time-to-event outcome is primary, and that there is a secondary outcome of infection within 90 days of randomization. To explicitly account for deaths within the 90-day window we use a 3-level proportional odds ordinal logistic model with 0=alive and infection-free, 1=alive and infection, 2=dead (whether or not preceded by infection). For the actual study we may use a partial proportional odds model to allow for a different treatment effect for the union of infection and death as compared to the effect for death alone. A prior distribution can be specified for the ratio of the two odds ratios (for infection + death and for death) such that there is a 0.95 chance that the ratio of odds ratios is between \(\frac{1}{3}\) and \(3\). A Bayesian power simulation with that amount of borrowing between the treatment effect for the two outcomes is presented at the end of this section.

Use the Weibull model described above to estimate 90-day mortality.

Code

rnd <- function(x) round(x, 3)

S <- function(t) exp(- 0.9357 * t ^ 0.5224)

m90 <- 1. - S(90 / 365.25)

m30 <- 1. - S(30 / 365.25)The estimated mortality by 90d is 0.362. The estimated probability of infection for 90-day survivors is 0.2, so the ordinal outcome is expected to have relative frequencies listed in the first line below.

Code

# Hmisc posamsize and popower function cell probabilities are averaged

# over the two groups, so need to go up by the square root of the odds

# ratio to estimate these averages

phigh <- c(1. - 0.2 - m90, 0.2, m90)

or <- 0.65

pmid <- pomodm(p=phigh, odds.ratio=sqrt(or))

plow <- pomodm(p=pmid, odds.ratio=sqrt(or))

phigh <- rnd(phigh)

pmid <- rnd(pmid)

plow <- rnd(plow)

n <- round(posamsize(pmid, odds.ratio=or, power=0.8)$n / 2)

Infpow <- round(popower(pmid, odds.ratio=or, n=2 * Nprime)$power, 3)

Infpow[1] 0.844| Treatment | Y=0 | Y=1 | Y=2 |

|---|---|---|---|

| Standard Tx | 0.438 | 0.2 | 0.362 |

| New Tx | 0.545 | 0.185 | 0.27 |

| Average | 0.491 | 0.195 | 0.314 |

The second line in the table are the outcome probabilities when an odds ratio of 0.65 is applied to the standard treatment probabilities. The last line is what is given to the posampsize and popower functions to compute the sample size need for an \(\alpha=0.05\) two-sided Wilcoxon/proportional odds test for differences in the two groups on the 3-level outcome severity with power of 0.8. The needed sample size is 301 per group were a frequentist test to be used. The frequentist power for the sample size (339 per group) that will be used for the primary outcome is 0.844.

For Bayesian power we use a process similar to what was used for the proportional hazards model above, but not quite trusting the quadratic approximation to the log likelihood for the proportional odds model, due to discretenesss of the outcome variable. Before simulating a power curve for all sample sizes using the normal approximation to the data likelihood, we do a full Bayesian simulation of 1000 trials with 300 patients per group in addition to using the approximate normal prior/normal likelihood method.

Here is a function to simulate one study with an ordinal outcome.

Code

require(rmsb)

options(mc.cores=4, rmsb.backend='cmdstan')

cmdstanr::set_cmdstan_path(cmdstan.loc)

sim1 <- function(n, pcontrol, or, orcut=1, bayes=FALSE) {

# n: number of pts in each group

# pcontrol: vector of outcome probabilities for control group

# or: odds ratio

# orcut: compute Pr(OR < orcut)

# Compute outcome probabilities for new treatment

ptr <- pomodm(p=pcontrol, odds.ratio=or)

k <- length(pcontrol) - 1

tx <- c(rep(0, n), rep(1, n))

y <- c(sample(0 : k, n, replace=TRUE, prob=pcontrol),

sample(0 : k, n, replace=TRUE, prob=ptr) )

f <- lrm(y ~ tx)

chi <- f$stats['Model L.R.']

theta.hat <- coef(f)['tx']

s2 <- vcov(f)['tx', 'tx']

tau <- 1.9989

omega <- 1.0 / s2

v <- 1.0 / (tau + omega)

mu <- omega * theta.hat / (tau + omega)

if(! all(c(length(mu), length(v), length(chi))) == 1) stop("E")

postprob <- NULL

if(bayes) {

# Set prior on the treatment contrast

pcon <- list(sd=0.7073, c1=list(tx=1), c2=list(tx=0),

contrast=expression(c1 - c2) )

f <- blrm(y ~ tx, pcontrast=pcon,

loo=FALSE, method='sampling', iter=1000)

dr <- f$draws[, 'tx']

postprob <- mean(dr < log(orcut)) # Pr(beta<log(orcut)) = Pr(OR<orcut)

}

c(mu = as.vector(mu),

v = as.vector(v),

pval = as.vector(1.0 - pchisq(chi, 1)),

postprob = postprob)

}

# Define a function that can be run on split simulations on

# different cores

g <- function() {

nsim <- 1000

# Function to do simultions on one core

run1 <- function(reps, showprogress, core) {

R <- matrix(NA, nrow=reps, ncol=4,

dimnames=list(NULL, c('mu', 'v', 'pval', 'postprob')))

for(i in 1 : reps) {

showprogress(i, reps, core)

R[i, ] <- sim1(300, phigh, 0.65, bayes=TRUE)

}

list(R=R)

}

# Run cores in parallel and combine results

# 3 cores x 4 MCMC chains = 12 cores = machine maximum

runParallel(run1, reps=nsim, seed=1, along=1, cores=3)

}

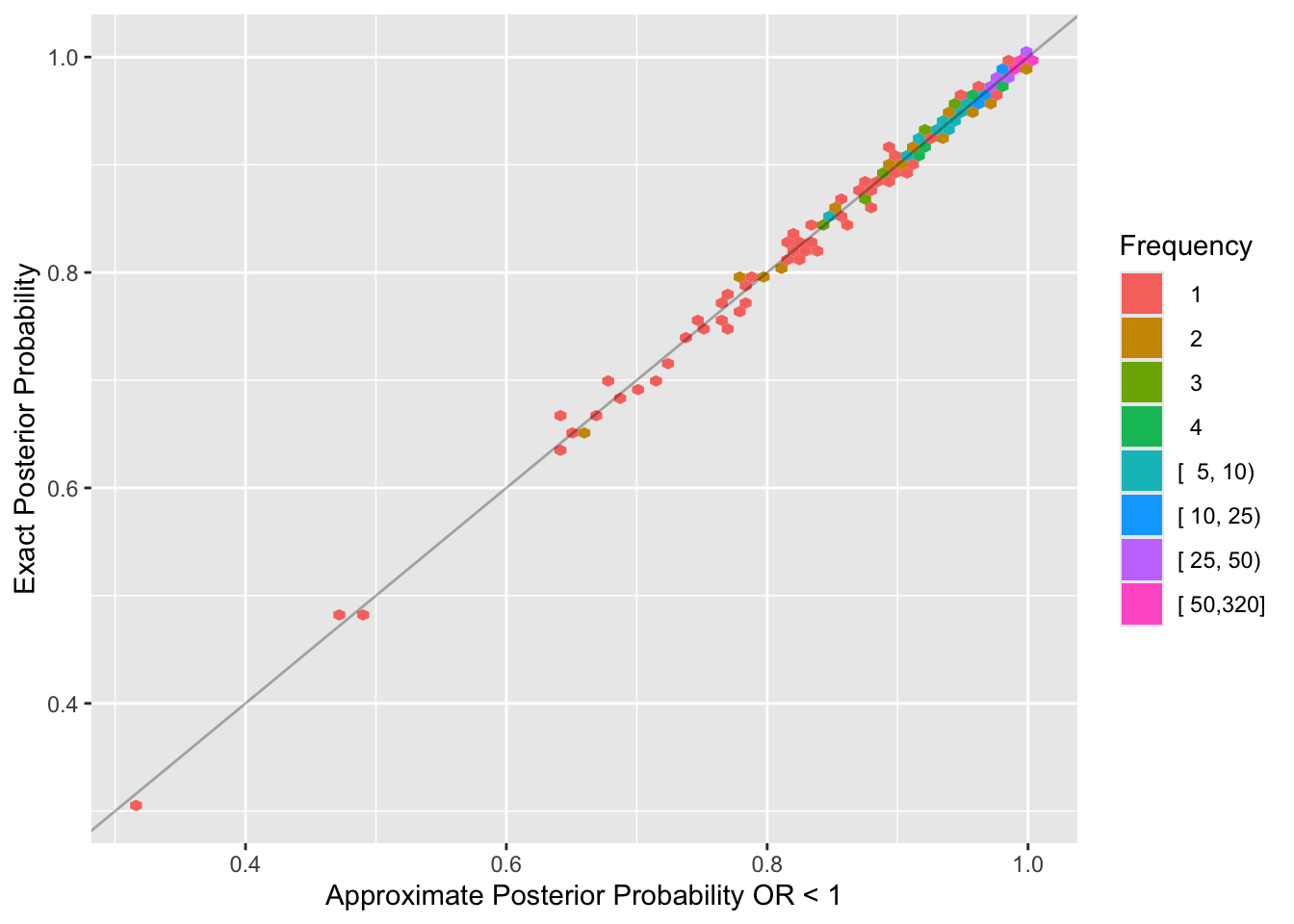

R <- runifChanged(g, file='k3.rds') # 15 min.Compute frequentist power and exact (to within simulation error) and approximate Bayesian powper.

Code

u <- as.data.table(R)

pp <- function(mu, v) pnorm((log(1.0) - mu) / sqrt(v))

u[, approxbayes := pp(mu, v)]

cnt <- c(1:5, 10, 25, 50)

ggplot(u, aes(x=approxbayes, y=postprob)) +

stat_binhex(aes(fill=cut2(after_stat(count), cnt)), bins=75) +

geom_abline(alpha=0.3) +

guides(fill=guide_legend(title='Frequency')) +

xlab('Approximate Posterior Probability OR < 1') +

ylab('Exact Posterior Probability')

Code

h <- function(x) c(Power = round(mean(x), 3))

u[, rbind('Frequentist' = h(pval < 0.05),

'Bayesian' = h(postprob > 0.975),

'Approximate Bayesian' = h(approxbayes > 0.975))] Power

Frequentist 0.795

Bayesian 0.782

Approximate Bayesian 0.780The posterior distribution is extremely well approximated by a normal distribution at a sample size that matters. Bayesian power is just slightly below frequentist power because frequentist test statistics do not place limits on the treatment effect, e.g., allow the odds ratio to be unrealistically far from 1.0.

Use the approximation for proportional odds model Bayesian power going forward. Compute power for the primary endpoint’s sample size.

Code

R <- expand.grid(n = Nprime, rep=1:1000)

setDT(R)

g <- function() {

set.seed(17)

R[, as.list(sim1(n, phigh, or, bayes=FALSE)), by=.(n, rep)]

}

S <- runifChanged(g, R, or, sim1, file='k3n.rds')

S[, pphi := 1 * (pp(mu, v) > 0.975), by=.(n, rep)]

Infpower <- S[, mean(pphi)]

Infpower[1] 0.811The Bayesian power to detect an OR of 0.65 at a sample size of 339 per group is 0.811.

Bayesian Power for Different Treatment Effect for Infection and Death

The proportional odds (PO) model can provide a correct assessment of which treatment is better overall even when PO is not satisfied. But with non-PO the power will not be optimal, and estimates of outcome probabilities for individual outcomes can be off. To estimate Bayesian power when PO is not assumed, allow the treatment effect (OR) for I|D (infection unioned (“or”d) with death) to differ from the OR for D. In the \(Y=0,1,2\) notation we have one OR for \(Y\geq 1\) and a separate OR for \(Y=2\). This is the partial PO model of Peterson & Harrell (1990). The amount of borrowing for the I|D treatment effect and the D effect is specified by a prior for the ratio of the I|D and D ORs. We assume the log of the ratio of the ORs is normal with mean zero and variance such that the probability that the ratio of ratios is between \(\frac{1}{3}\) and \(3\) is 0.95. The standard deviation accomplishing this is \(\frac{\log(3)}{1.96} = 0.5605\).

Also simulate the power of the treatment comparison on I|D when there is no borrowing of treatment effect information across I and D, i.e., when the prior for the non-PO effect (here the special effect of treatment on D) is non-informative. This partial PO model assumes nothing about how the treatment effect on I|D compares to the effect on D.

The sample size is 302 per group to yield Bayesian power of 0.8 against OR=0.65 in PO holds. For this sample size, simulations showed the loss of power by not assuming PO was minimal when the data satisfied PO. So the simulations are run with \(n=75\) per group to better understand power implications for varying amounts of information borrowing.

The R rmsb package’s blrm function has the user specify priors for departures from PO through contrasts and through a “departure from PO” function (the cppo argument). The contrast for the differential treatment effect over outcome components in the information-borrowing case is the same one that we apply to the main treatment effect except for using a standard deviation involving \(\log(3)\) instead of \(\log(4)\).

When the statistical analysis plan for the study is detailed, more simulations will be run that use a non-PO data generating mechanism, but for now data will be simulated the same as earlier, assuming PO for treatment.

Code

sim1npo <- function(n, pcontrol, or, orcut=1, peffect=FALSE) {

# n: number of pts in each group

# pcontrol: vector of outcome probabilities for control group

# or: odds ratio to detect for I|D

# orcut: Compute Pr(OR < orcut)

# Set peffect=TRUE to debug

# Compute outcome probabilities for new treatment

ptr <- pomodm(p=pcontrol, odds.ratio=or)

k <- length(pcontrol) - 1

tx <- c(rep(0, n), rep(1, n))

y <- c(sample(0 : k, n, replace=TRUE, prob=pcontrol),

sample(0 : k, n, replace=TRUE, prob=ptr) )

# Set prior on the treatment contrast

pcon <- list(sd=0.7073, c1=list(tx=1), c2=list(tx=0),

contrast=expression(c1 - c2) )

# PO model first

f <- blrm(y ~ tx, pcontrast=pcon,

loo=FALSE, method='sampling', iter=1000)

g <- blrm(y ~ tx,

y ~ tx, cppo=function(y) y == 2,

pcontrast=pcon,

loo=FALSE, method='sampling', iter=1000)

# Set prior on the "treatment by Y interaction" contrast

npcon <- pcon

npcon$sd <- 0.5605

h <- blrm(y ~ tx,

y ~ tx, cppo=function(y) y == 2,

pcontrast=pcon, npcontrast=npcon,

loo=FALSE, method='sampling', iter=1000)

if(peffect) {

print(f)

print(g)

print(h)

print(rbind(po=c(coef(f), NA), flat=coef(g), skeptical=coef(h)))

return()

}

df <- f$draws[, 'tx'] # log OR for I|D

dg <- g$draws[, 'tx'] # = log OR for D for PO

dh <- h$draws[, 'tx']

# Pr(beta < 0) = Pr(OR < 1)

lor <- log(orcut)

c(po = mean(df < lor), flat = mean(dg < lor), skeptical = mean(dh < lor))

}

g <- function() {

nsim <- 1000

# Function to do simultions on one core

run1 <- function(reps, showprogress, core) {

R <- matrix(NA, nrow=reps, ncol=3,

dimnames=list(NULL, c('po', 'flat', 'skeptical')))

for(i in 1 : reps) {

showprogress(i, reps, core)

R[i,] <- sim1npo(N, phigh, 0.65)

}

list(R=R)

}

# Run cores in parallel and combine results

# 3 cores x 4 MCMC chains = 12 cores = machine maximum

runParallel(run1, reps=nsim, seed=11, cores=3, along=1)

}

N <- 75

R <- runifChanged(g, sim1, N, file='k3npo.rds')

bp <- apply(R, 2, function(x) mean(x >= 0.975))

bp po flat skeptical

0.228 0.203 0.208 For \(n=75\) per group, the Bayesian power is 0.228 when completely borrowing information from D to help inform the treatment effect estimate for D|I. Allowing for arbitrarily different effects (non-PO) reduces the power to 0.203, and assuming some similarity of effects yields a power of 0.208.

9.7.4 Bayesian Power for 6-Level Ordinal Non-Inferiority Outcome

Suppose that the 6-level ECOG performance status assessed at 30 days is another secondary endpoint, and is considered to be a non-inferiority endpoint. Assessment of non-inferiority is more direct with a Bayesian approach and doesn’t need to be as conservative as a frequentist analysis, as we are assessing evidence for a broader range of treatment effects that includes slight harm in addition to the wide range of benefits.

The outcome will be analyzed using a Bayesian proportional odds model with the same prior on the log odds ratio as has been used above. The 6 cell probabilities assumed for the control group appear in the top line in the table below.

The estimated mortality by 30d is 0.224.

Code

# Start with relative probabilities of Y=0-4 and make them

# sum to one minus 30d mortality

phigh <- c(42, 41, 10, 1, 1) / 100

phigh <- c(phigh / sum(phigh) * (1. - m30), m30)

or <- 0.65

pmid <- pomodm(p=phigh, odds.ratio=sqrt(or))

plow <- pomodm(p=pmid, odds.ratio=sqrt(or))

n <- round(posamsize(pmid, odds.ratio=or, power=0.8)$n / 2)

Perfpowf <- popower(pmid, odds.ratio=or, n = Nprime * 2)$power

g <- function(x) {

names(x) <- paste0('Y=', (1 : length(x)) - 1)

round(x, 3)

}

rbind('Standard treatment' = g(phigh),

'New treatment' = g(plow),

'Average' = g(pmid) ) Y=0 Y=1 Y=2 Y=3 Y=4 Y=5

Standard treatment 0.343 0.335 0.082 0.008 0.008 0.224

New treatment 0.446 0.319 0.065 0.006 0.006 0.158

Average 0.393 0.330 0.074 0.007 0.007 0.189The second line in the table are the outcome probabilities when an odds ratio of 0.65 is applied to the standard treatment probabilities. The last line is what is given to the posampsize function to compute the sample size need for an \(\alpha=0.05\) two-sided Wilcoxon / proportional odds test for differences in the two groups on the 6-level outcome severity with power of 0.8. This is also used for popower. The needed sample size is 283 per group were a frequentist test to be used. This is for a superiority design. The frequentist power for 339 per group is 0.864524.

For performance status a non-inferiority design is used, so Bayesian power will differ more from frequentist superiority power, as the Bayesian posterior probability of interest is \(P(\text{OR} < 1.2)\). Simulate Bayesian power using the normal approximation.

Code

g <- function() {

set.seed(14)

or <- 0.65

R <- expand.grid(n = Nprime, rep=1:1000)

setDT(R)

R[, as.list(sim1(n, phigh, or, orcut=1.2, bayes=FALSE)), by=.(n, rep)]

}

S <- runifChanged(g, sim1, file='k6.rds') # 16s

pp <- function(mu, v) pnorm((log(1.2) - mu) / sqrt(v))

S[, pphi := 1 * (pp(mu, v) > 0.975), by=.(n, rep)]

Perfpowb <- S[, mean(pphi)]Bayesian power to detect an OR of 0.65 when seeking evidence for OR < 1.2 with 339 patients per group is 0.987.

9.8 Sample Size Needed For Prior Insensitivity

One way to choose a target sample size is to solve for \(n\) that controls the maximum absolute difference in posterior probabilities of interest, over the spectrum of possible-to-observe data. Let

- \(n\) = sample size in each of two treatment groups

- \(\Delta\) = unknown treatment effect expressed as a difference in means \(\mu_{1} - \mu_{2}\)

- the data have a normal distribution with variance 1.0

- \(\hat{\Delta}\) = observed difference in sample means \(\overline{Y}_{1} - \overline{Y}_{2}\)

- \(V(\hat{\Delta}) = \frac{2}{n}\)

- Frequentist test statistic: \(z = \frac{\hat{\Delta}}{\sqrt{\frac{2}{n}}}\)

- \(\delta\) assumed to be the difference not to miss, for frequentist power

- One-sided frequentist power for two-sided \(\alpha\) with \(z\) critical value \(z_{\alpha}\): \(P(z > z_{\alpha}) = 1 - \Phi(z_{\alpha} - \frac{\delta}{\sqrt{\frac{2}{n}}})\)

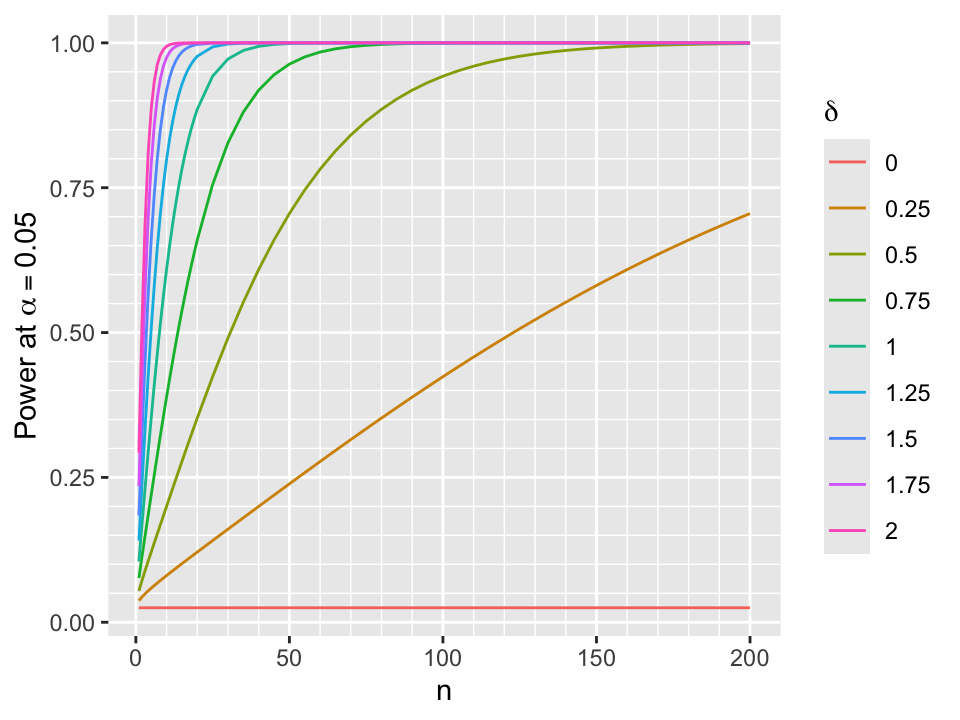

Power curves are shown below for \(\alpha=0.05\).

Code

d <- expand.grid(n=c(1:19, seq(20, 200, by=5)),

delta = seq(0, 2, by=0.25))

ggplot(d, aes(x=n, y=1 - pnorm(qnorm(0.975) - delta / sqrt(2 / n)), color=factor(delta))) +

geom_line() + guides(color=guide_legend(title=expression(delta))) +

scale_x_continuous(minor_breaks=seq(0, 200, by=10)) +

scale_y_continuous(minor_breaks=seq(0, 1, by=0.05)) +

xlab('n') + ylab(expression(paste('Power at ', alpha==0.05)))

- Assume skeptical prior for \(\Delta\) that is normal with mean 0 and variance \(\frac{1}{\tau}\)

- Posterior distribution for \(\Delta | \hat{\Delta}\) is normal

- variance = \(\frac{1}{\tau + \frac{n}{2}}\)

- mean = \(\frac{n\hat{\Delta}}{n + 2\tau}\)

Code

pp <- function(tau, n, delta, Deltahat)

round(1 - pnorm((delta - n * Deltahat / (n + 2 * tau)) * sqrt(tau + n / 2)), 4)

# Compute prior SD such that P(Delta > delta) = prob

psd <- function(delta, prob) round(delta / qnorm(1 - prob), 4)- Example: Probability of misleading evidence for efficacy

- agreed-upon prior was such that \(P(\Delta > 0.5) = 0.05 \rightarrow\) SD of the prior is 0.304

- someone disagrees, and has their own posterior probability of efficacy based on their prior, which was chosen so that \(P(\Delta > 0.5) = 0.005 \rightarrow\) SD of their prior is 0.1941

- suppose that \(\hat{\Delta}\) at \(n=100\) is 0.3

- \(\rightarrow\) posterior probability of \(\Delta > 0\) using pre-specified prior is 0.9801

- \(\rightarrow\) posterior probability of \(\Delta > 0\) using the critic’s prior is 0.9783

- there is substantial evidence for efficacy in the right direction

- probability of being wrong in acting as if the treatment works is 0.0199 to those who designed the study, and is 0.0217 to the critic

- the two will disagree more about evidence for a large effect, e.g. \(P(\Delta > 0.2)\)

- \(P(\Delta > 0.2)\) using pre-specified prior: 0.724

- \(P(\Delta > 0.2)\) for the critic: 0.7035

- Probabilities of being wrong in acting as if the treatment effect is large: 0.276, 0.2965

- Sample size needed to overcome effect of prior depends on the narrowness of the prior (\(\tau; \sigma\))

- for posterior mean and variance to be within a ratio \(r\) of those from a flat prior \(n\) must be \(> \frac{2\tau r}{1 - r}\)

- within 5% for \(n > 38\tau\)

- within 2% for \(n > 98\tau\)

- within 1% for \(n > 198\tau\)

- typical value of \(\sigma = \frac{1}{\tau} = 0.5\) so \(n > 196\) to globally be only 2% sensitive to the prior

- Posterior \(P(\Delta > \delta | \hat{\Delta}) = 1 - \Phi((\delta - \frac{n\hat{\Delta}}{n + 2\tau})\sqrt{\tau + \frac{n}{2}})\)

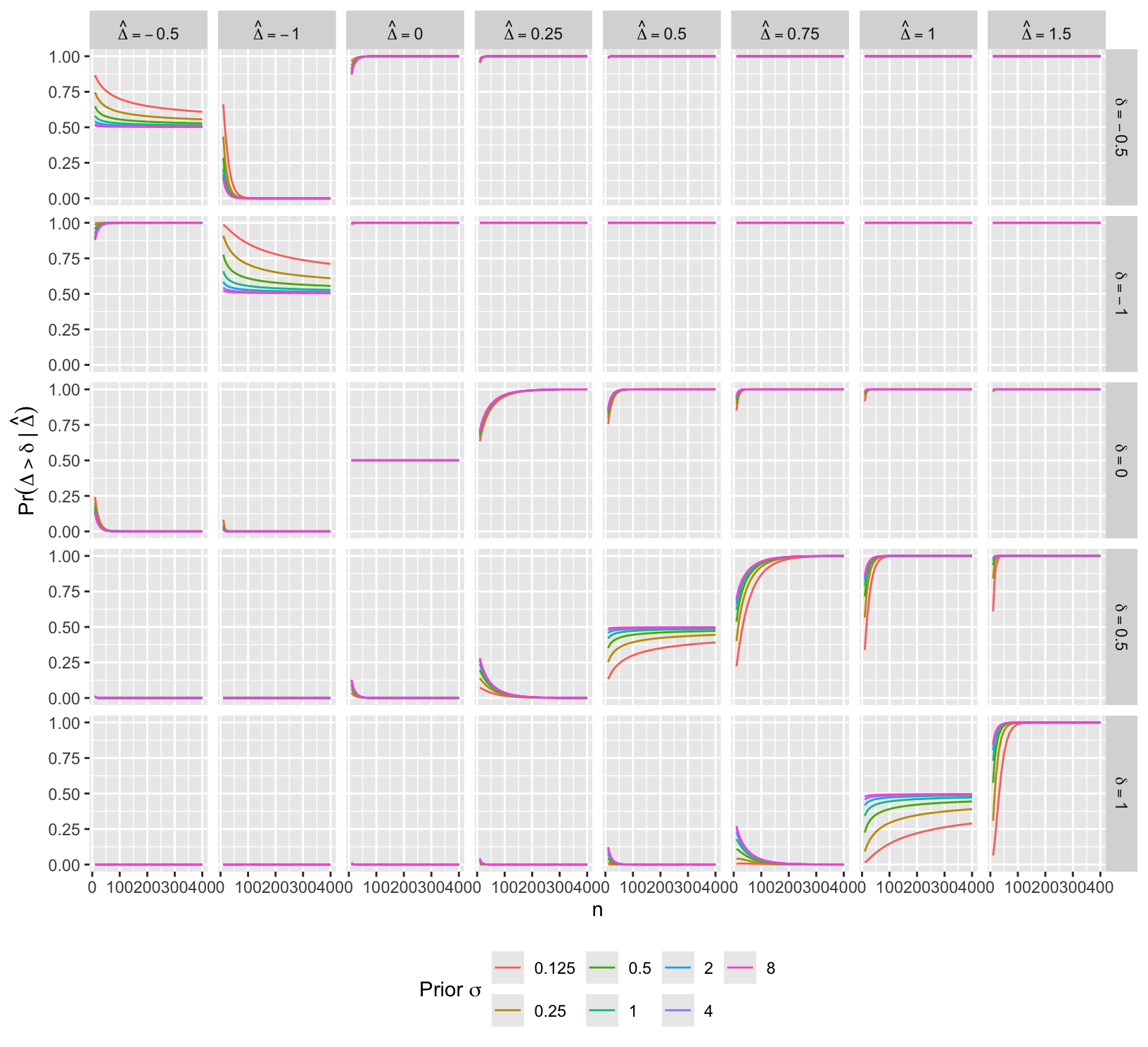

- Choose different values of the prior SD to examine sensitivity of cumulative posterior distribution to prior

- Below see that the posterior probability of any treatment benefit (when \(\delta=0\)) is not sensitive to the prior

- Probability of benefit \(> \delta\) for large \(\delta\) is sensitive to the prior

- priors having large probability of large \(\Delta\) lead to higher posterior probabilities of large \(\Delta > \delta\) when \(\delta\) is large

- For each \(n, \delta\), and SDs compute the absolute difference in posterior probabilities that \(\Delta > \delta\)

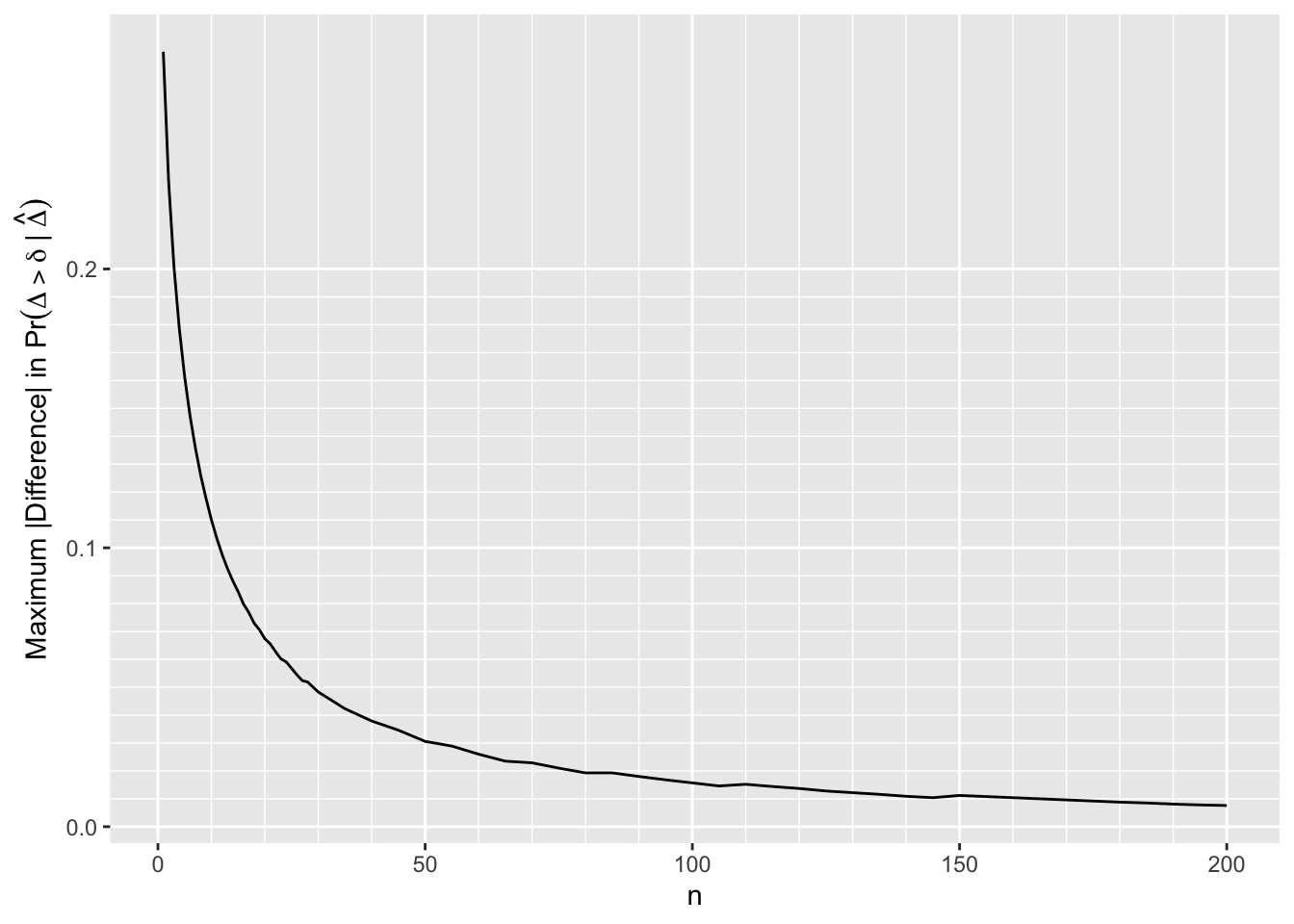

- First look at the result when assessing evidence for any efficacy (\(\delta=0\)) when the most extreme priors (\(\sigma=\frac{1}{8}\) vs. \(\sigma=8\)) are compared

- Don’t consider conditions when both posterior probabilities are in [0.1, 0.9] where disagreements are probably inconsequential

Code

require(data.table)

sigmas <- c(1/8, 8)

w <- expand.grid(n = c(1:30, seq(35, 200, by=5)),

Deltahat = seq(-1, 5, by=0.025))

setDT(w)

z <- lapply(1 : nrow(w),

function(i) w[i, .(n, Deltahat,

p1 = pp(8, n, 0, Deltahat),

p2 = pp(1/8, n, 0, Deltahat) )] )

u <- rbindlist(z)

# Ignore conditions where both posterior probabilities are in [0.1, 0.9]

# Find maximum abs difference over all Deltahat

m <- u[, .(mdif = max(abs(p1 - p2) * (p1 < 0.1 | p2 < 0.1 | p1 > 0.9 | p2 > 0.9))), by=n]

ggplot(m, aes(x=n, y=mdif)) + geom_line() +

scale_x_continuous(minor_breaks=seq(0, 200, by=10)) +

scale_y_continuous(minor_breaks=seq(0, 0.2, by=0.01)) +

ylab(expression(paste('Maximum |Difference| in ', Pr(Delta > delta ~ "|" ~ hat(Delta)))))

By \(n=170\) the largest absolute difference in posterior probabilities of any efficacy is 0.01

The difference is 0.02 by \(n=80\)

Sample sizes needed for frequentist power of 0.9 are \(n=38\) for \(\Delta=0.5\) and \(n=85\) for \(\Delta=0.25\)

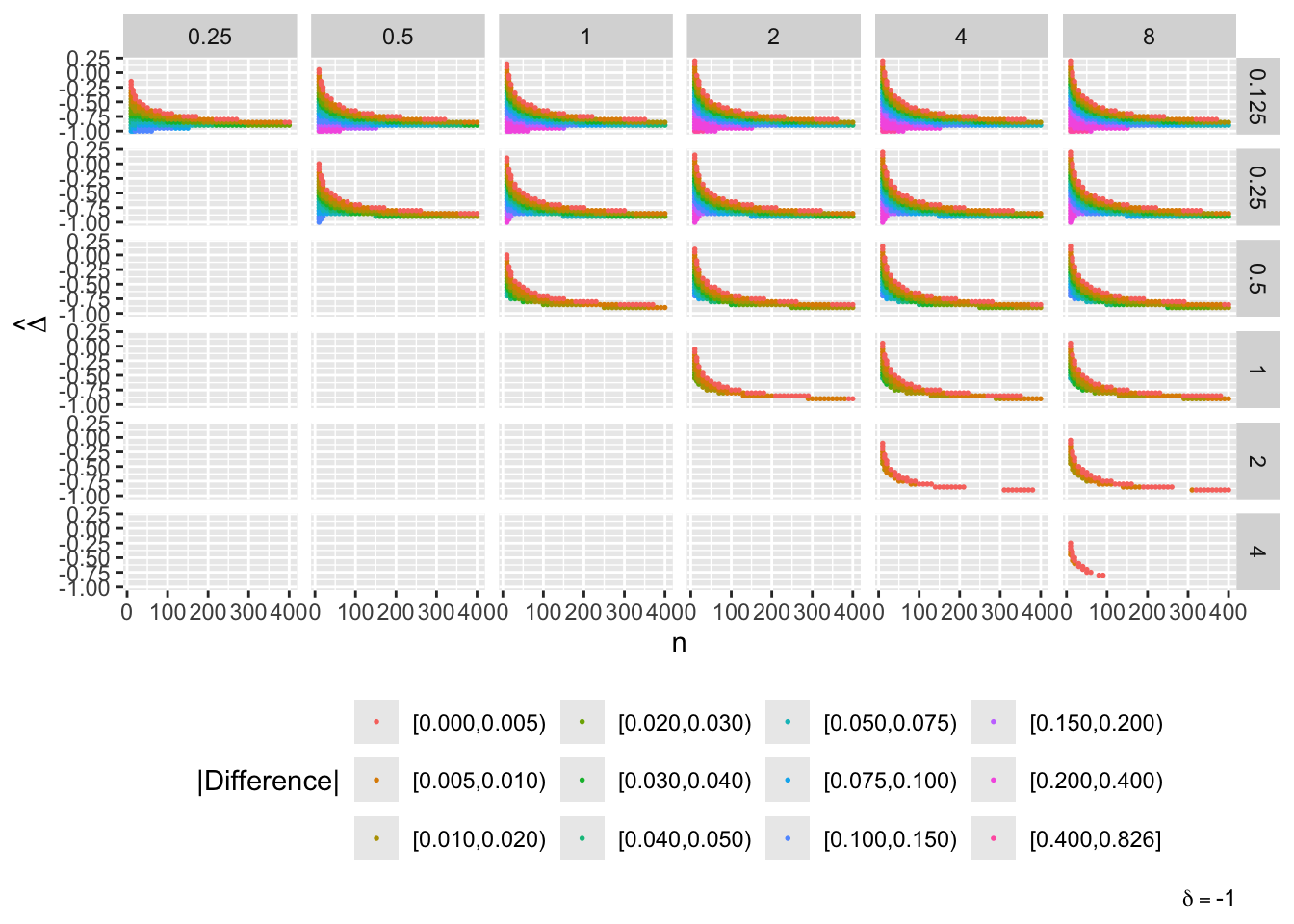

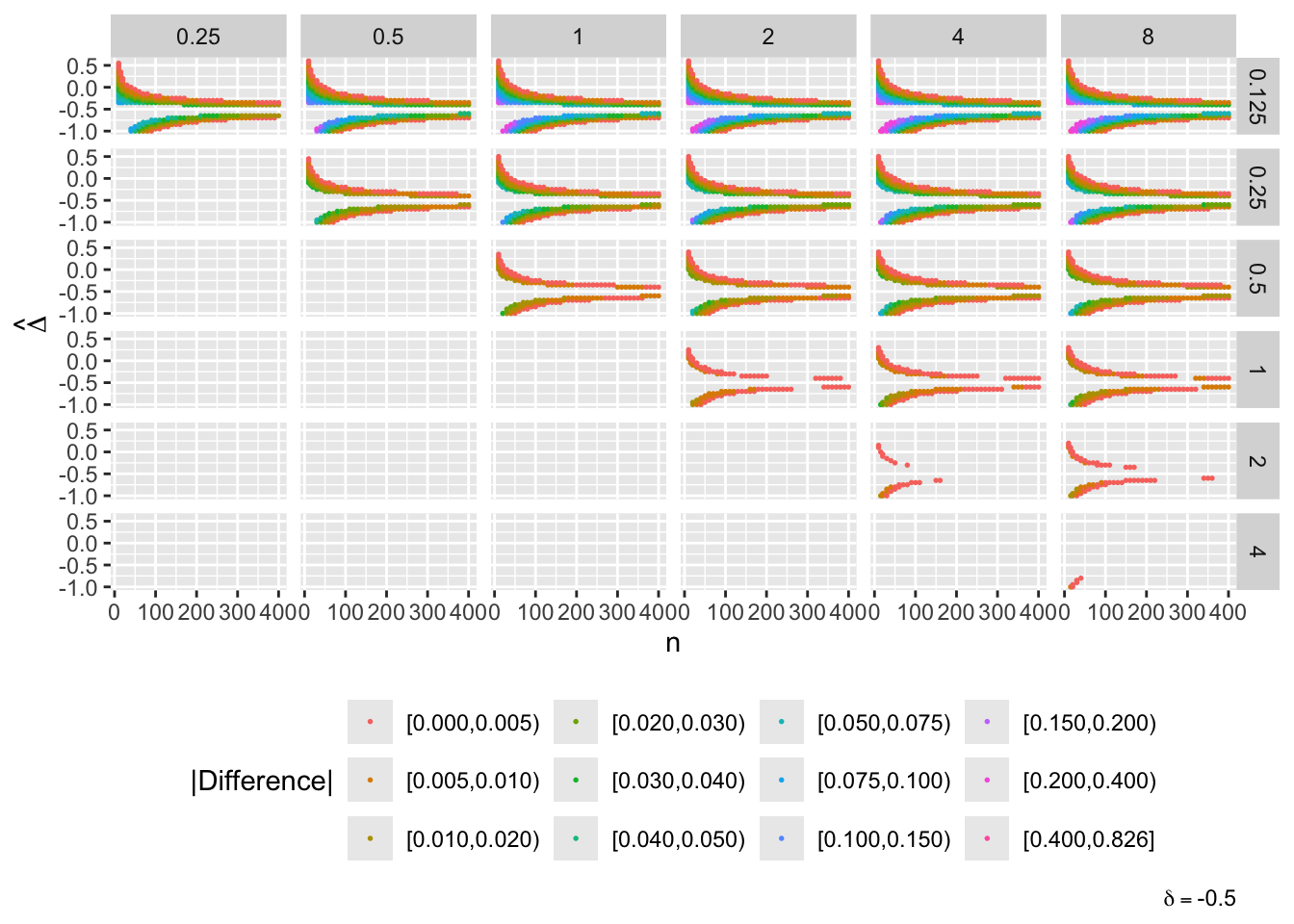

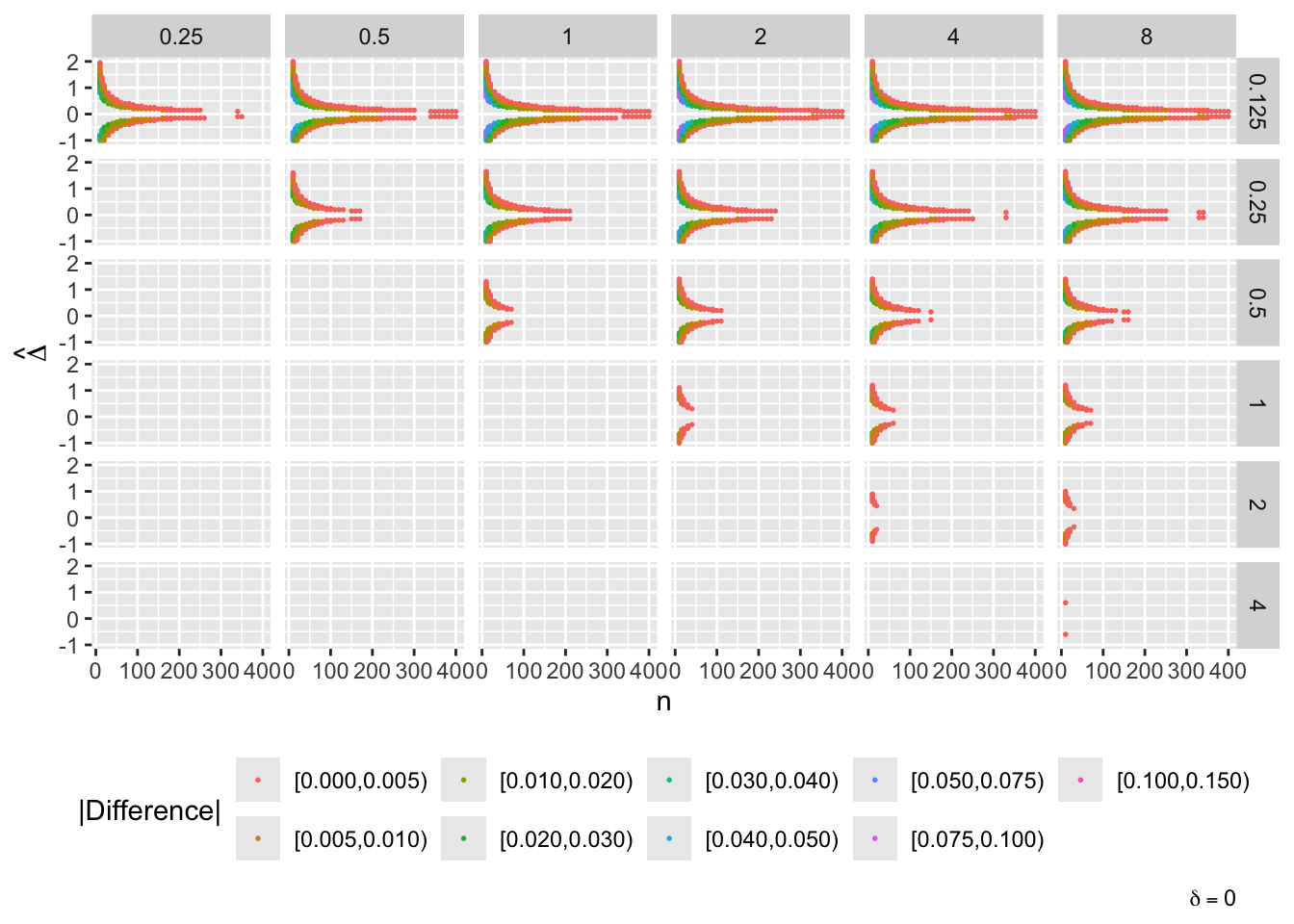

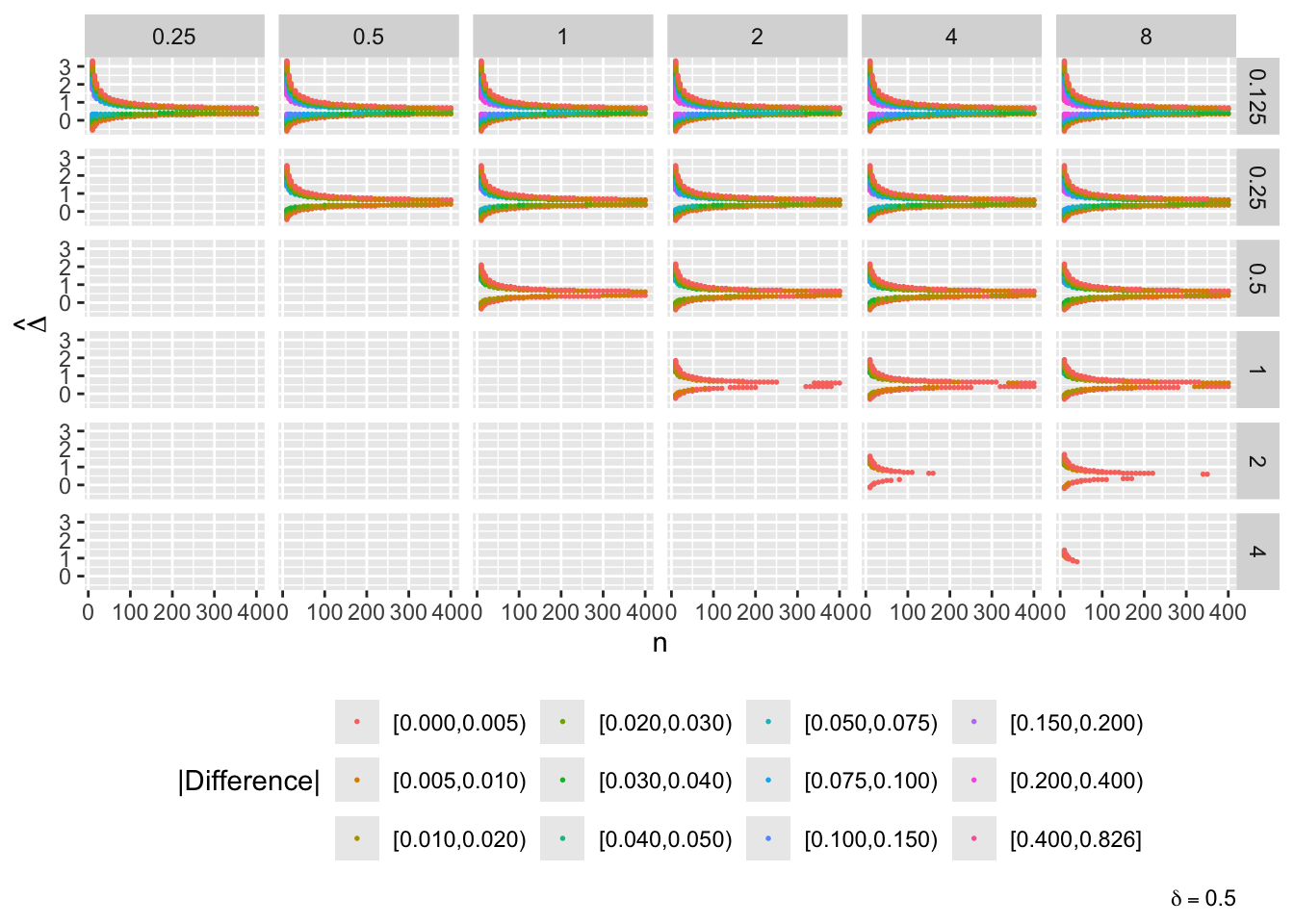

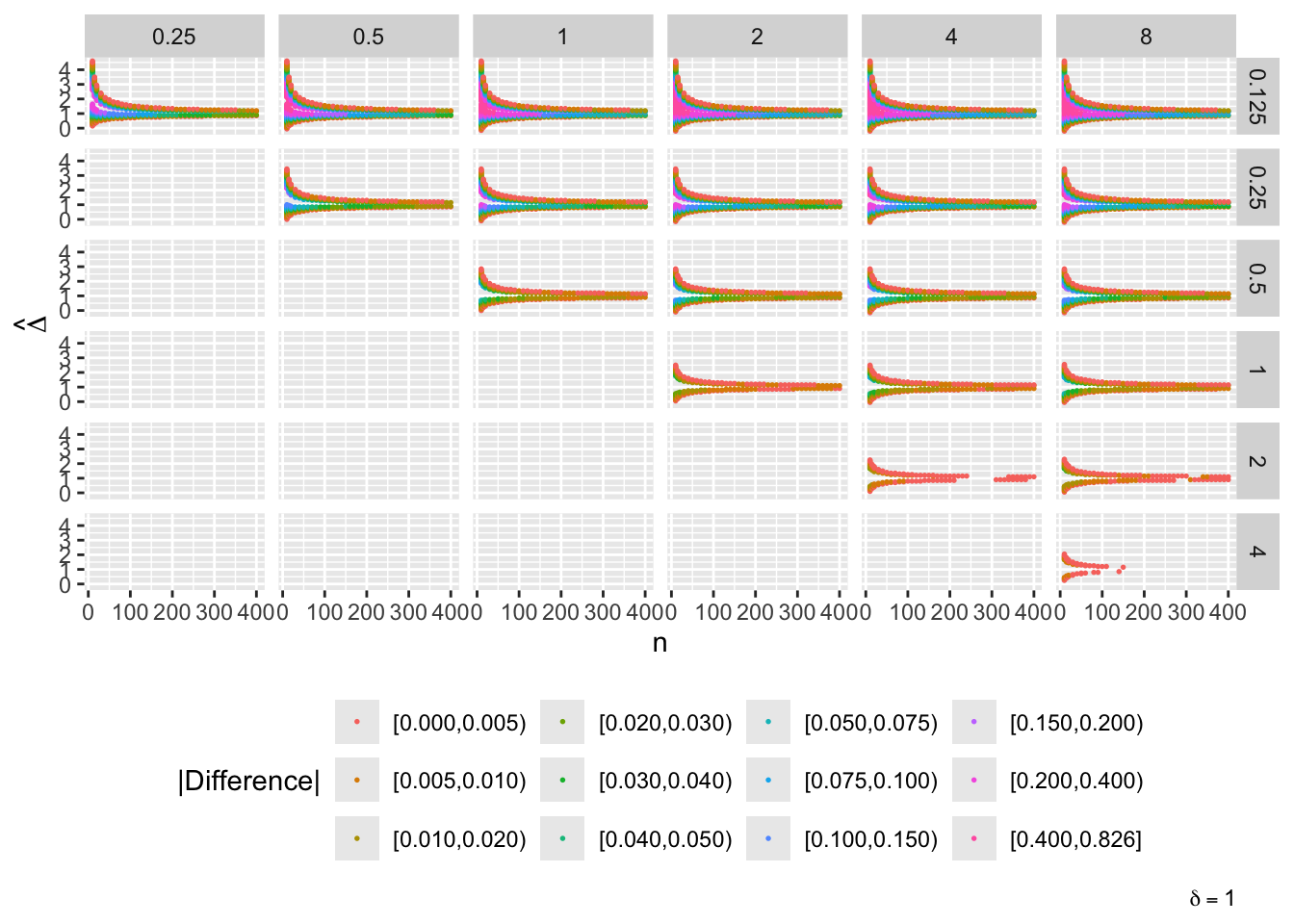

Now consider the full picture where evidence for efficacy \(> \delta\) where \(\delta \neq 0\) is included

Vary \(n, \delta, \hat{\Delta}\) and the two prior SDs

Don’t show points where the difference < 0.0025 or when both posterior probabilities are in [0.1, 0.9]

In the graph one of the prior SDs is going across, and the other is vertical; diagonals where the two SDs are equal are not needed

Code

# For a given n and delta two values of SDs compute the absolute difference

# of the two posterior probabilities that Delta > delta when one of the posterior

# probabilities was < 0.1 or > 0.9 and when the difference exceeds 0.0025

adif <- function(n, delta, s1, s2) {

tau1 <- 1 / s1

tau2 <- 1 / s2

Deltahat <- seq(-1, 5, by=0.05)

p1 <- pp(tau1, n, delta, Deltahat)

p2 <- pp(tau2, n, delta, Deltahat)

i <- abs(p1 - p2) >= 0.0025 & (pmin(p1, p2) < 0.1 | pmax(p1, p2) > 0.9)

data.table(n, delta, s1, s2, Deltahat, ad=abs(p1 - p2))[i]

}

taus <- c(1/8, 1/4, 1/2, 1, 2, 4, 8)

sigmas <- 1 / taus

dels <- seq(-1, 1, by=0.5)

w <- expand.grid(n = c(10, 15, seq(20, 400, by=10)),

delta = dels,

s1 = sigmas,

s2 = sigmas)

setDT(w)

w <- w[s2 > s1]

z <- lapply(1 : nrow(w), function(i) w[i, adif(n, delta, s1, s2)])

u <- rbindlist(z)

u[, adc := cut2(ad, c(0, 0.005, 0.01, 0.02, 0.03, 0.04, 0.05, 0.075, 0.1, 0.15, 0.2, 0.4))]

g <- vector('list', length(dels))

names(g) <- paste0('δ=', dels)

for(del in dels)

g[[paste0('δ=', del)]] <- ggplot(u[delta==del], aes(x=n, y=Deltahat, color=adc)) + geom_point(size=0.3) +

facet_grid(s1 ~ s2) + guides(color=guide_legend(title='|Difference|')) +

theme(legend.position='bottom') +

ylab(expression(hat(Delta))) +

labs(caption=bquote(delta == .(del)))

maketabs(g, initblank=TRUE)

- “Mouth” from the left of each panel corresponds to regions where both posterior probabilities are in [0.1, 0.9]

- Most extremely different priors examined had SD=0.25 (skeptical of large \(\Delta\)) vs. SD=8 (large \(\Delta\) are likely)

- For \(\delta=0\) differences vanish for \(n > 200\)

- For \(\delta=-1\) differences are greatest when observed differences in means \(\hat{\Delta} = -1\)

- For \(\delta=1\) differences are greatest when \(\hat{\Delta} = 1\)

- Required \(n=400\) for influence of prior to almost vanish everywhere when the most different priors are paired

- More realistic to compare somewhat skeptical SD=1 with skeptical SD=0.25

- Larger discrepancy when \(\delta=-1\) and \(\hat{\Delta}=-1\)

- \(n > 300\) makes for small discrepancy

Another display of the same conditions except for fewer values of \(\hat{\Delta}\).

Code

w <- expand.grid(n = c(10, 15, seq(20, 400, by=10)),

delta = seq(-1, 1, by=0.5),

s = sigmas,

Deltahat = c(-1, -0.5, 0, 0.25, 0.5, 0.75, 1, 1.5))

setDT(w)

z <- lapply(1 : nrow(w), function(i) w[i, .(n, delta, s, Deltahat, pp=pp(1/s, n, delta, Deltahat))])

u <- rbindlist(z)

ggplot(u, aes(x=n, y=pp, color=factor(s))) + geom_line() +

facet_grid(paste0('delta==', delta) ~ paste('hat(Delta)==', Deltahat), label='label_parsed') +

guides(color=guide_legend(title=expression(paste('Prior ', sigma)))) +

ylab(expression(Pr(Delta > delta ~ "|" ~ hat(Delta)))) +

theme(legend.position='bottom')

See Rigat (2023) for related ideas.

9.9 Sequential Monitoring and Futility Analysis

I want to make it quite clear that I have no objection with flexible designs where they help us avoid pursuing dead-ends. The analogous technique from the sequential design world is stopping for futility. In my opinion of all the techniques of sequential analysis, this is the most useful. — Senn (2013)

- Compute PP as often as desired, even continuously

- Formal Bayesian approach can also consider having adequate safety data

- Stop when PP > 0.95 for any efficacy and PP > 0.95 for safety event hazard ratio ≤ 1.2

- Spiegelhalter et al. (1993) argue for use of ‘range of equivalence’ in monitoring

- Bayesian design is very simple if no cap on n

- But often reach a point where clinically important efficacy unlikely to be demonstrated within a reasonable n

- Futility is more easily defined with Bayes. 3 possible choices:

- Stop when P(efficacy < ε) is > 0.9

- Use posterior predictive distribution at planned max sample size (Berry (2006))

- Pre-specify max n and continue until that n if trial not stopped early for efficacy or harm (more expensive)

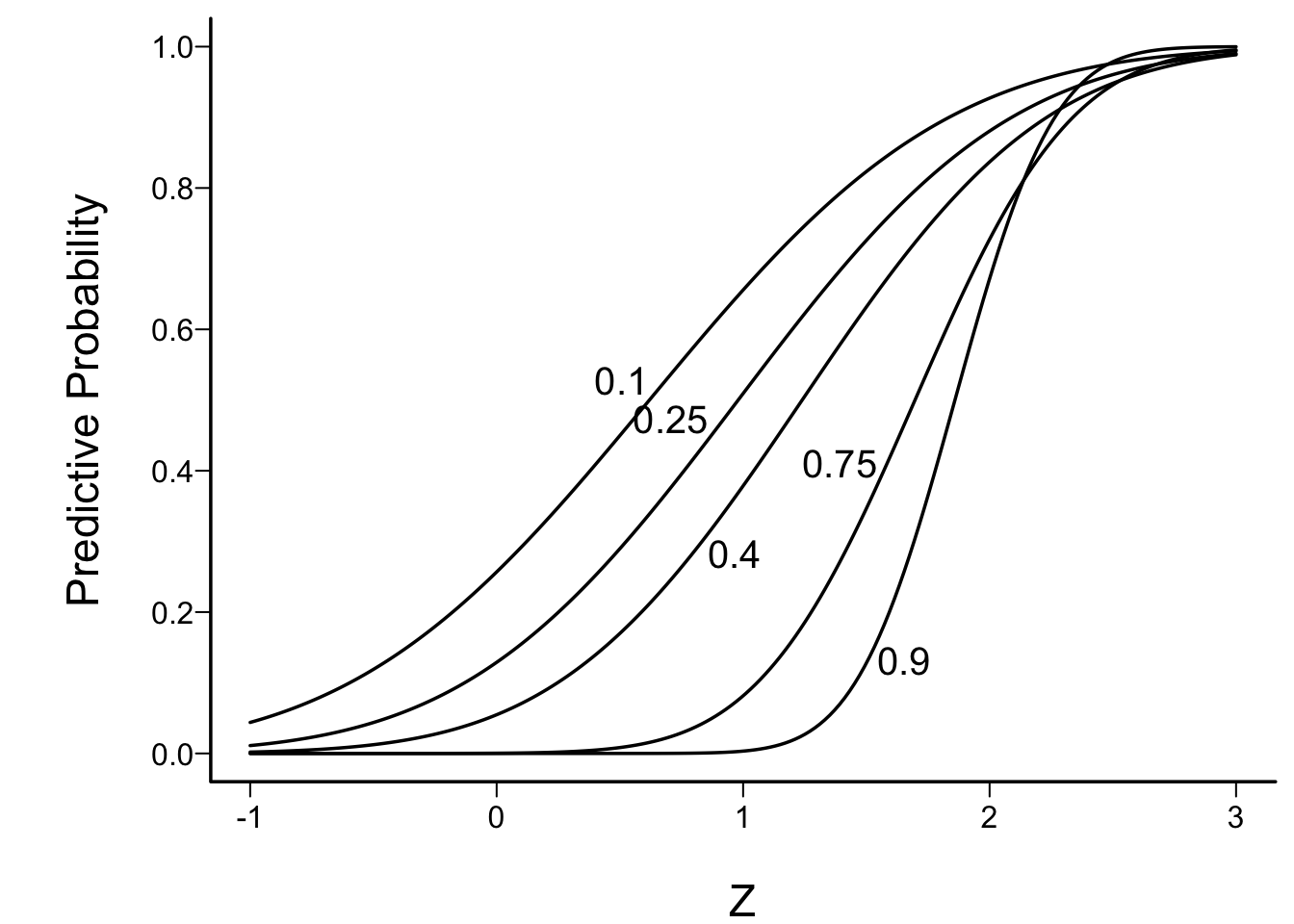

- Insightful approximation of Spiegelhalter et al. (1993)

- use Z-statistic at an interim look

- assume fraction f of subjects randomized to date

- based only on observables

Code

spar(bty='l')

pf <- function(z, f) pnorm(z/sqrt(1 - f) - 1.96 * sqrt(f) / sqrt(1 - f))

zs <- seq(-1, 3, length=200)

fs <- c(.1, .25, .4, .75, .9)

d <- expand.grid(Z=zs, f=fs)

f <- d$f

d$p <- with(d, pf(Z, f))

d$f <- NULL

p <- split(d, f)

labcurve(p, pl=TRUE, ylab='Predictive Probability')

Example: to have reasonable hope of demonstrating efficacy if an interim Z value is 1.0, one must be less than 1/10th of the way through the trial

For example simulations of futility see this

The published report of any study attempts to answer the crucial question: What does this trial add to our knowledge? The strength of the Bayesian approach is that it allows one to express the answer formally. It therefore provides a rational framework for dealing with many crucial issues in trial design, monitoring and reporting. In particular, by making explicitly different prior opinions about efficacy, and differing demands on new therapies, it may shed light on the varying attitudes to balancing the clinical duty to provide the best treatment for individual patients, against the desire to provide sufficient evidence to convince the general body of clinical practice. — Spiegelhalter et al. (1993)

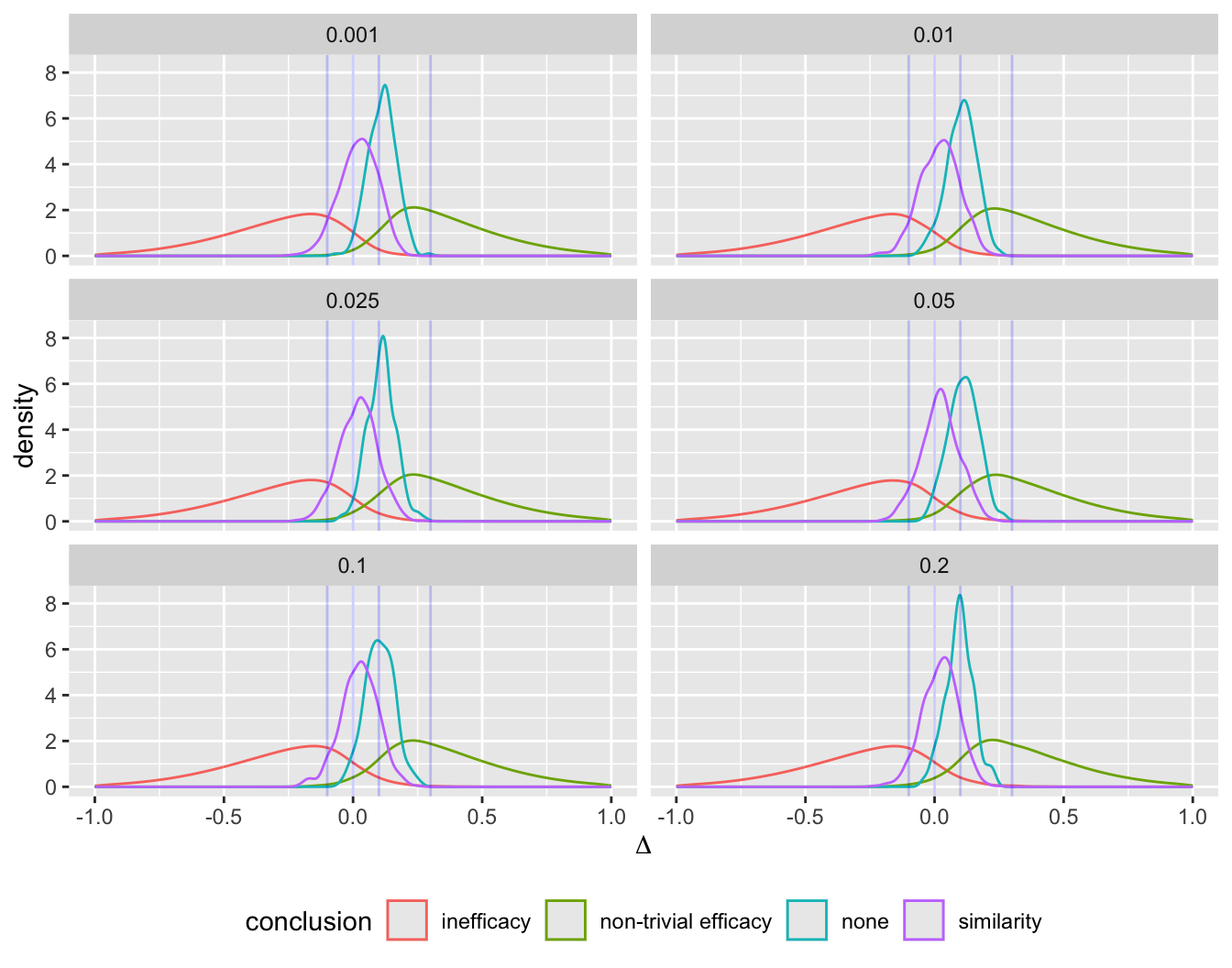

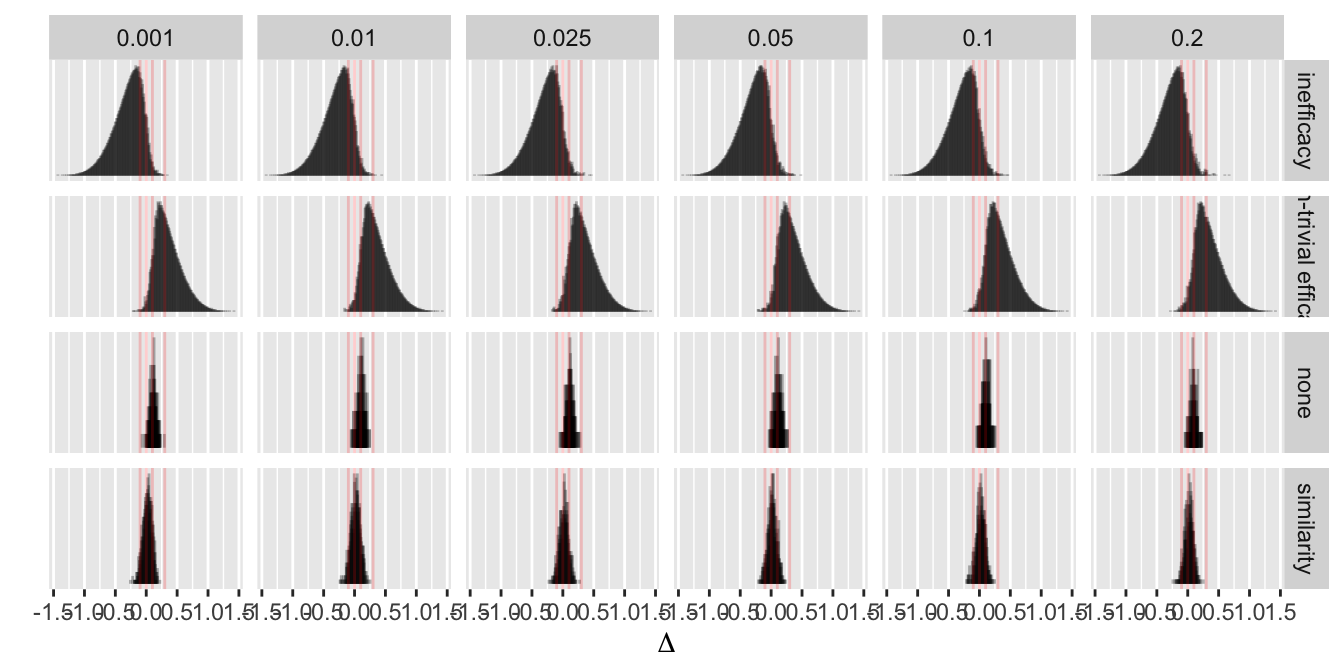

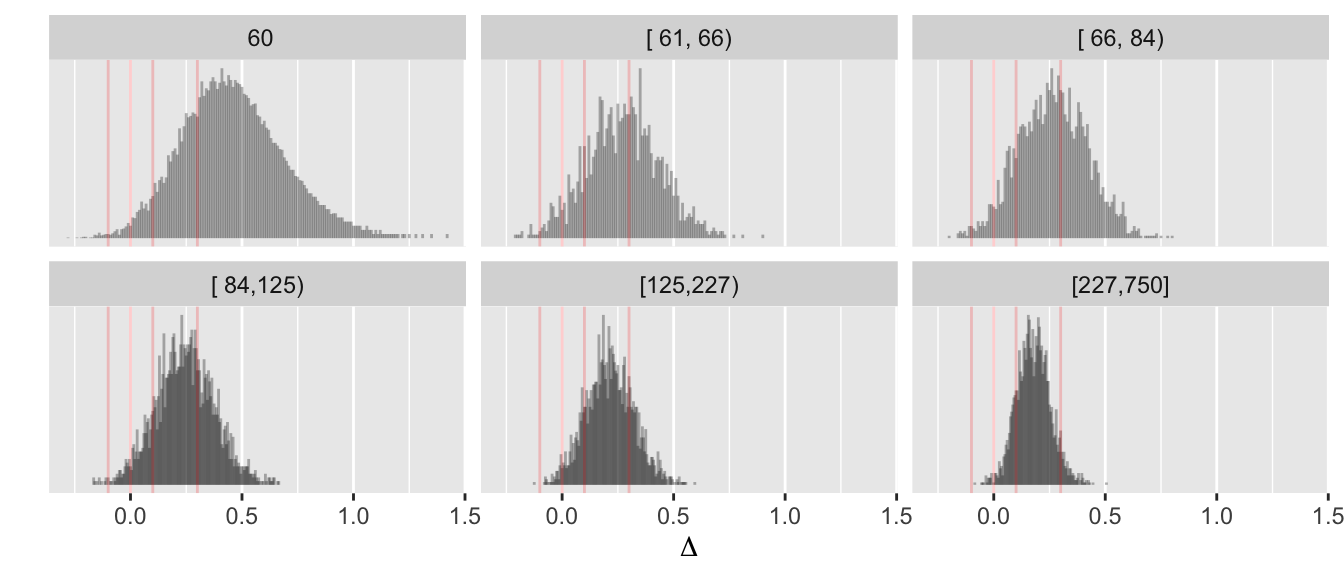

9.10 Example Operating Characteristics

Even with a disadvantageous mismatch between the prior used in the analysis and the prior used to generate the universe of efficacies, the design was able to stop early for evidence of non-trivial efficacy in 0.401 of 10,000 trials over a skeptical distribution of true efficacies, and was right 0.91 of the time when doing so. The design was right 0.98 of the time in terms of there being truly some efficacy when stopping for more than trivial efficacy. Stopping early for evidence of less than trivial efficacy was possible in 0.518 of the trials and was the correct decision 0.97 of the time. One quarter of such trials stopped with fewer than 14 participants studied and half had a sample size less than 32. Overall the average sample size at the time of stopping was 138 for the most skeptical prior, which is just over ½ what a traditional frequentist design would require (234) just to have power to detect the MCID, still not providing evidence for non-trivial efficacy, inefficacy, or similarity. With the Bayesian sequential design only 0.024 of the trials ended without a conclusion for the most skeptical prior.

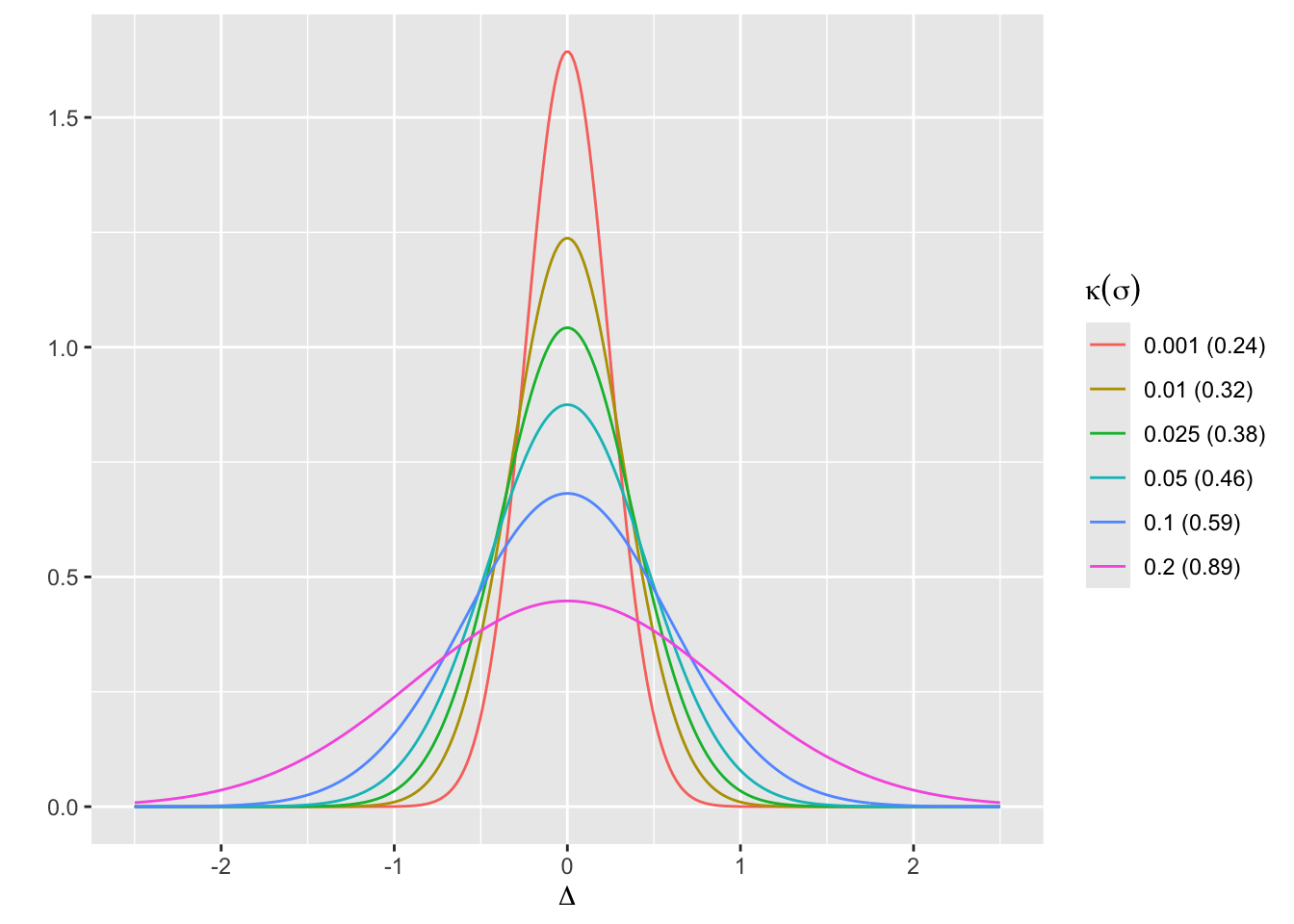

For the Bayesian sequential design at the start of this chapter, simulate the operating characteristics for the following setting.

- Two-arm parallel-group RCT with equal sample sizes per group

- Response variable is normal with standard deviation 1.0

- Estimand of interest is \(\Delta\) = the unknown difference in means

- MCID is \(\delta=0.3\)

- Minimum clinically detectable difference is \(\gamma=0.1\)

- Similarity interval is \(\Delta \in [-0.15, 0.15]\)

- Maximum affordable sample size is 750 per treatment group

- Required minimum precision in estimating \(\Delta\): \(\pm 0.4\) (half-width of 0.95 credible interval)

- \(\rightarrow\) minimum sample size \(N_{p}\) before stopping for efficacy

- Allow stopping for inefficacy at any time, even if the treatment effect cannot be estimated after stopping, i.e., one only knows there is a high probability that the effect \(< \gamma\).

- The first analysis takes place after the first participant has been enrolled and followed, then after each successive new participant’s data are complete. The first look for efficacy is delayed until the \(N_{p}^\text{th}\) participant has been followed so that the magnitude of the treatment effect has some hope of being estimated.

- Prior for \(\Delta\) is normal with mean 0 and SD \(\sigma\) such that \(P(\Delta > 0.75) = \kappa\) where \(\kappa\) is varied over \({0.2, 0.1, 0.05, 0.025}\)

- This corresponds to \(\sigma=\) 0.8911, 0.5852, 0.456, 0.3827

- These are skeptical priors, chosen because the treatment is assumed to be an incremental rather than a curative therapy

The assumed data model leads to a frequentist point estimate of \(\Delta\) = \(\hat{\Delta}\) = difference in two means, with variance \(\frac{2}{n}\), where \(n\) is the per-group sample size. The one-sided frequentist power for a two-tailed test with \(\alpha=0.05\) is \(1 - \Phi(1.96 - \frac{0.3}{\sqrt{\frac{2}{n}}})\), and setting this to 0.9 requires \(n=2[\frac{1.96 + 1.282}{0.3}]^2 = 234\). 1.282 is \(\Phi^{-1}(0.9)\).

To arrive at \(N_{p}\), the minimum sample size needed to achieve non-embarrassing precision in estimating \(\Delta\), keep in mind that skeptical priors for \(\Delta\) push the posterior distribution for \(\Delta\) towards zero, resulting in narrower posterior distributions. To be conservative in estimating \(N_{p}\) use a flat prior. The 0.90 credible interval is \(\hat{\Delta} \pm 1.645\sqrt{\frac{2}{n}}\). Setting the half-length of the interval to \(\delta=0.3\) results in \(n = N_{p} = 2(\frac{1.645}{0.3})^2 = 60\) per group.

At a given interim look with a cumulative difference in means of \(\hat{\Delta}\) the posterior distribution of \(\Delta | \hat{\Delta}\) is normal with variance \(\frac{1}{\tau + \frac{n}{2}}\) and mean \(\frac{n\hat{\Delta}}{n + 2\tau}\) where \(\tau\) is the prior precision, \(\frac{1}{\sigma^2}\). Here is a function to compute the posterior probability that \(\Delta > \delta\) given the current difference in means \(\hat{\Delta}\).

Code

pp <- function(delta, Deltahat, n, kappa) {

sigma <- psd(0.75, kappa)

tau <- 1. / sigma ^ 2

v <- 1. / (tau + n / 2.)

m <- n * Deltahat / (n + 2. * tau)

1. - pnorm(delta, m, sqrt(v))

}A simple Bayesian power calculation for a single analysis after \(n\) participants are enrolled in each treatment group, and considering only evidence for any efficacy, sets the probability at 0.9 that the posterior probability of \(\Delta > 0\) exceeds 0.95 when \(\Delta=\delta\). The posterior probability PP that \(\Delta > 0\) is \(\Phi(\frac{n\hat{\Delta}/\sqrt{2}}{\sqrt{n + 2\tau}})\). Making use of \(\hat{\Delta} \sim n(\delta, \frac{2}{n})\), the probability that PP > 0.95 is \(1 - \Phi(\frac{za - \delta}{\sqrt{\frac{2}{n}}})\) where \(z = \Phi^{-1}(0.95)\) and \(a=\frac{\sqrt{2}\sqrt{n + 2\tau}}{n}\).

Code

# Special case when gamma=0, for checking

bpow <- function(n, delta=0.3, kappa=0.05) {

tau <- 1. / psd(0.75, kappa) ^ 2

z <- qnorm(0.95)

a <- sqrt(2) * sqrt(n + 2 * tau) / n

1. - pnorm((z * a - delta) / sqrt(2 / n))

}

bpow <- function(n, delta=0.3, gamma=0, kappa=0.05, ppcut=0.95) {

tau <- 1. / psd(0.75, kappa) ^ 2

z <- qnorm(ppcut)

a <- n + 2. * tau

1. - pnorm(sqrt(n / 2) * ((a / n) * (gamma + z * sqrt(2 / a) ) - delta))

}

findx <- function(x, y, ycrit, dec=0) round(approx(y, x, xout=ycrit)$y, dec)

R$bayesN <- round(findx(10:500, bpow(10:500), 0.9))

bpow(R$bayesN)[1] 0.9006247Setting the Bayesian power to 0.9 results in \(196\) when \(\kappa=0.05\). Contrast this with the frequentist sample size of 234.

To understand the stringency of adding the requirement that efficacy is non-trivial to the requirement of having a very high probability that any efficacy exists, consider a sample size (45) and current mean difference (0.3) where the probability of any efficacy is 0.9. Compute the probability of non-trivial efficacy with that mean difference. The prior probability of a very large (0.75) or larger treatment effect is taken as \(\kappa=0.05\).

Code

pp( 0, 0.3, 45, 0.05)[1] 0.9017632Code

pp(0.1, 0.3, 45, 0.05)[1] 0.7790777Code

pp( 0, 0.36, 45, 0.05)[1] 0.9394288Code

pp(0.1, 0.36, 45, 0.05)[1] 0.8478874At the point at which there is over 0.9 chance of any efficacy in the right direction, the probability of non-trivial efficacy is 0.78. To achieve a probability of 0.85 would require an observed mean difference of 0.36 which raises the probability of any efficacy to 0.94.

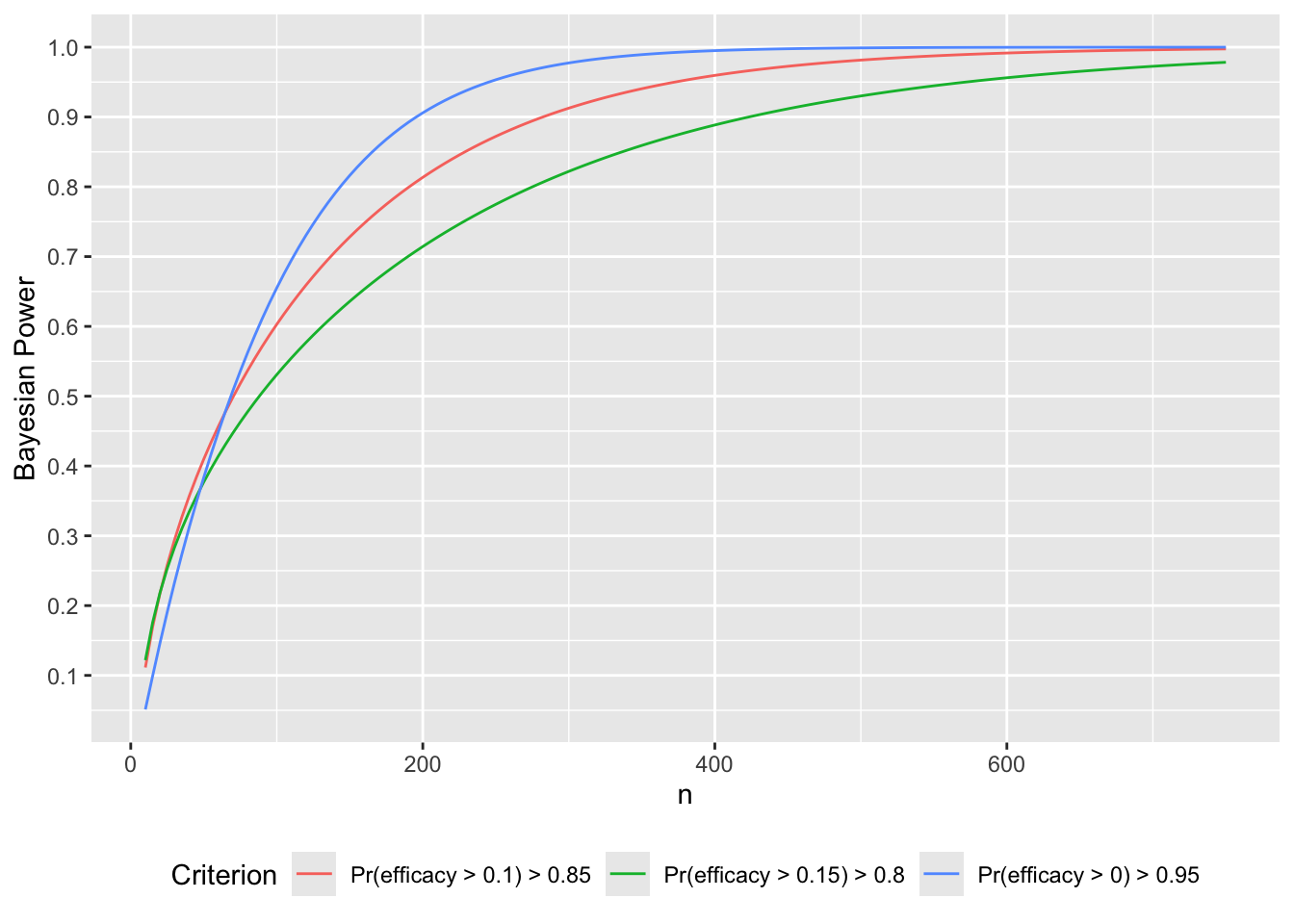

What if instead we wanted 0.9 Bayesian power to achieve a PP > 0.85 that \(\Delta > \gamma\), the threshold for a detectable treatment effect? Let’s compute over a range of \(n\) the two relevant probabilities: of getting \(P(\Delta > 0) > 0.95\) and of getting \(P(\Delta > 0.10) >0.85\), and also compute Bayesian power for \(P(\Delta > 0.15) > 0.8\).

Code

gammas <- c(0, .1, .15)

ppcuts <- c(0.95, 0.85, 0.8)

w <- expand.grid(crit=1:3, n=seq(10, 750, by=5))

setDT(w)

w[, Criterion := paste0('Pr(efficacy > ', gammas[crit], ') > ', ppcuts[crit])]

w[, p := bpow(n=n, gamma=gammas[crit], ppcut=ppcuts[crit])]

ggplot(w, aes(x=n, y=p, col=Criterion)) + geom_line() +

scale_y_continuous(breaks=seq(0, 1, by=0.1)) +

ylab('Bayesian Power') +

theme(legend.position='bottom')

Code

# Solve for n to get Bayesian power of 0.9

p <- w[, .(n=findx(n, p, 0.9)), by=.(crit, Criterion)]

navg <- if(length(R$stopn))

R$stopn[conclusion == 'non-trivial efficacy' & kappa==0.001, Mean]For a fixed sample-size study the necessary \(N\) to have a 0.9 chance that the posterior probability of more than trivial efficacy exceeds 0.85 is 282.

Later in this section the expected sample size to establishment of non-trivial efficacy will be estimated when continuous sequential analysis is allowed. This number is 122 for the most skeptical prior used. The more general expected sample size is that required on the average to reach any conclusion. This number is 138.

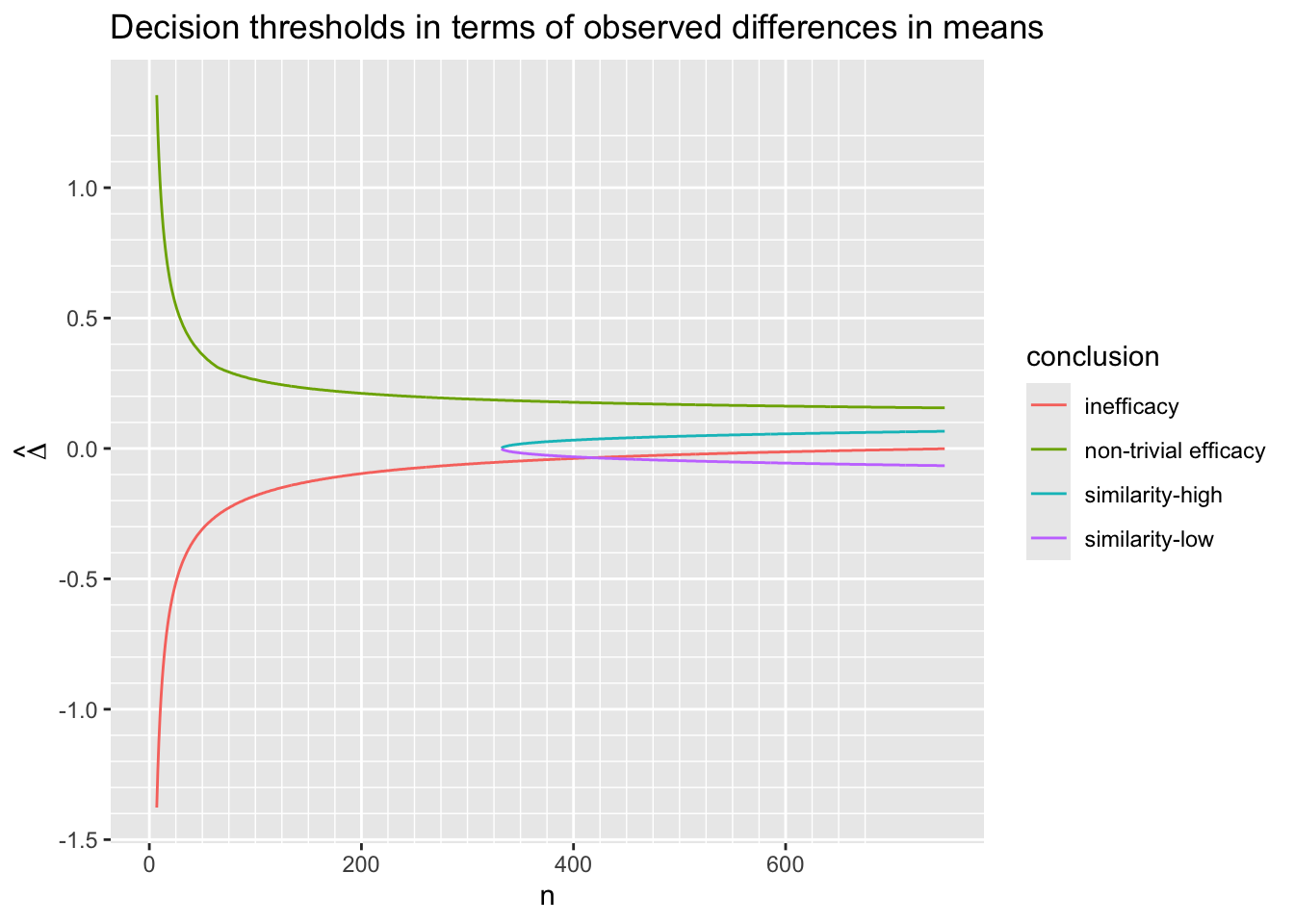

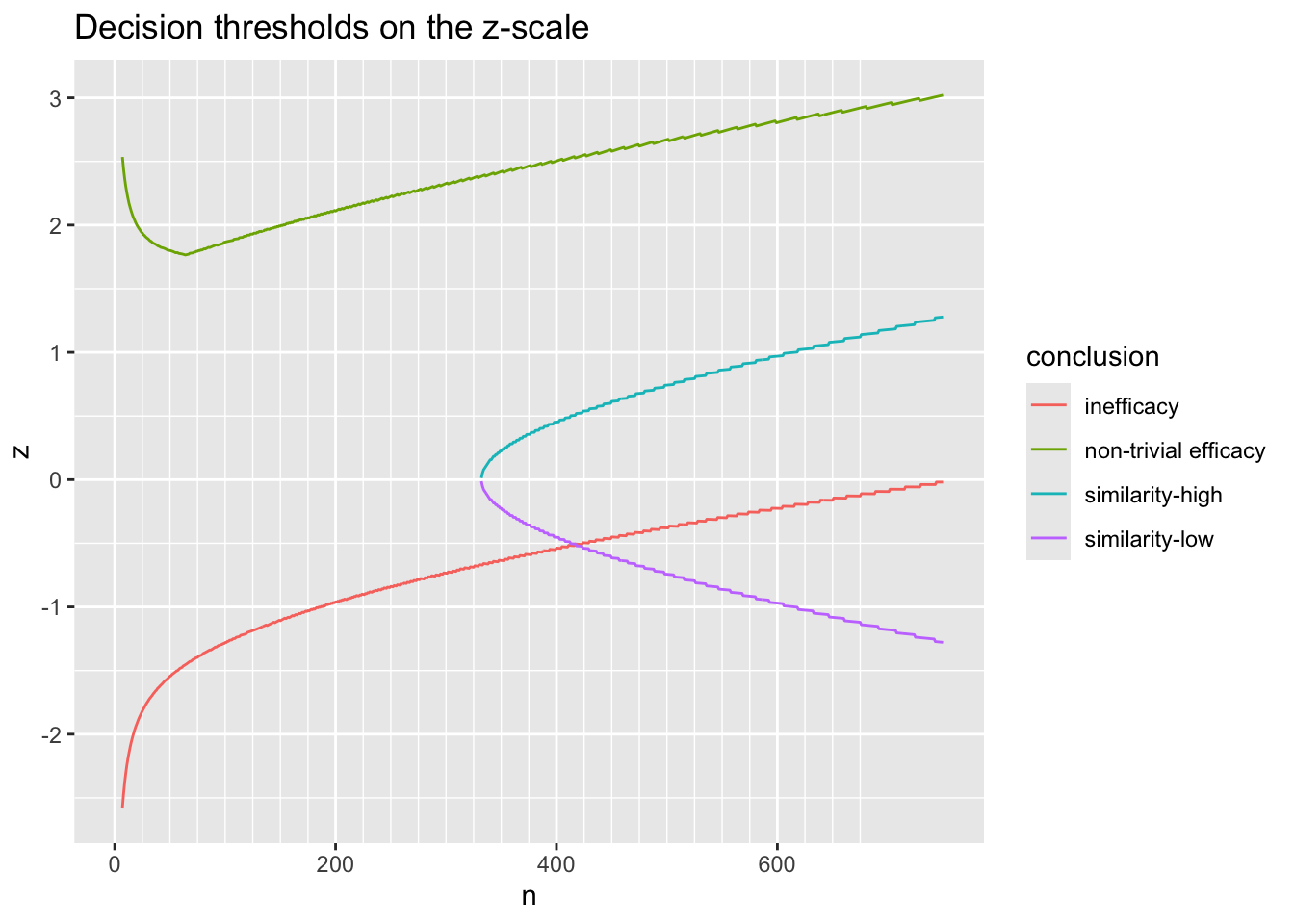

9.10.1 Decision Thresholds

Before simulating the trials, let’s compute the decision thresholds, i.e., the least impressive values of the observed difference in means \(\hat{\Delta}\) that would trigger a given conclusion, as a function of the current sample size. Let \(\hat{\Delta}\) be a regular sequence of values for each sample size. For the current purpose don’t delay any efficacy looks, and treat each of the three reasons for stopping independently. For inefficacy, the \(\hat{\Delta}\) threshold is the highest \(\hat{\Delta}\) leading to stopping for inefficacy. For non-trivial efficacy, this is the lowest \(\hat{\Delta}\) leading to stopping for non-trivial effectiveness. For similarity the threshold is an interval with the lower value being the minimum \(\hat{\Delta}\) meeting the similarity criterion and the upper limit is the maximum \(\hat{\Delta}\) meeting the criterion.

Do the calculations for one \(\kappa\).

Code

difs <- seq(-1.5, 1.5, by=0.001)

ns <- 1 : 750

d <- expand.grid(dif=difs, n=ns)

setDT(d)

thresh <- function(Deltahat, n, kappa=0.05) {

# For an ascendeing Deltahat vector and single value of n

# Compute posterior probability of any efficacy

p1 <- pp(0., Deltahat, n, kappa)

# Compute probability of non-trivial efficacy

p2 <- pp(0.1, Deltahat, n, kappa)

# Compute probability of similarity

p3 <- pp(-0.15, Deltahat, n, kappa) -

pp( 0.15, Deltahat, n, kappa)

ineff <- max(Deltahat[p2 < 0.025])

eff <- min(Deltahat[p1 > 0.95 & p2 > 0.85])

simlow <- min(Deltahat[p3 > 0.95])

simhi <- max(Deltahat[p3 > 0.95])

x <- c(ineff, eff, simlow, simhi)

x[is.infinite(x)] <- NA

list(conclusion=c('inefficacy', 'non-trivial efficacy', 'similarity-low', 'similarity-high'),

Deltahat=x)

}

w <- d[, thresh(dif, n, kappa=0.05), by=n]

R$threshold <- w

w[n %in% c(50, 100, 200, 750)] n conclusion Deltahat

<int> <char> <num>

1: 50 inefficacy -0.309

2: 50 non-trivial efficacy 0.360

3: 50 similarity-low NA

4: 50 similarity-high NA

5: 100 inefficacy -0.181

6: 100 non-trivial efficacy 0.264

7: 100 similarity-low NA

8: 100 similarity-high NA

9: 200 inefficacy -0.096

10: 200 non-trivial efficacy 0.211

11: 200 similarity-low NA

12: 200 similarity-high NA

13: 750 inefficacy -0.001

14: 750 non-trivial efficacy 0.156

15: 750 similarity-low -0.066

16: 750 similarity-high 0.066Code

ggplot(w, aes(x=n, y=Deltahat, col=conclusion)) + geom_line() +

scale_x_continuous(minor_breaks=seq(0, 675, by=25)) +

scale_y_continuous(breaks=seq(-1.5, 1.5, by=0.5),

minor_breaks=seq(-1.5, 1.25, by=0.1)) +

ylab(expression(hat(Delta))) +